AI Is Moving Fast. Local Government Can’t Be Left Behind.

Closing the Readiness Gap in Public Sector AI Adoption

Before getting to the content today, I have two quick housekeeping notes to share.

First Note: Moving forward, I will be posting here on a more regular schedule. I am doing this to give readers a better sense of what to expect, so that you can pinpoint the topics and areas that are most important to you. I also hope that this format will encourage more feedback from readers and allow me to hit the topics you care most about.

Here’s the schedule:

Mondays - Weekly News Recap and/or Analysis: There is so much news in the AI in education field, not to mention AI in general, that it is almost impossible to keep up. On Mondays, I’ll aim to post either a short roundup of the news of the week or a deeper analytical dive into one key story, or both. If you have specific news items you would like me to cover, please let me know in the comments, send me a message here on Substack, or shoot me an email at mike@litpartners.ai.

Wednesdays - Curriculum, Courses, and Products: We’re rolling out a new curriculum design service at AI Literacy Partners, and I’ll aim to share the lesson plans as we finish them. I’ll also share other products like “The 12-Step Program to AI Literacy,” “The AI Driver’s License for Faculty,” “The AI Decision Matrix,” and more. As always, if you have feedback or ideas, let me know.

Fridays - Guest Posts, Conceptual Frameworks, Research Analysis, and “Big Picture Thinking”: In these posts, I’ll aim to share my opinions on where I think we are going, the mental maps and strategies we can use to address problems and opportunities around AI, and more. I’ll also be posting guest articles from different stakeholders in the education space on a wide variety of topics. If you have an idea for a guest post you would like to write for AI EduPathways, reach out!

Disclaimer: This does not mean I will be posting 3x a week. Sometimes I will, sometimes I won’t. Sometimes I may not post at all. However, I will aim to bucket each piece into its relevant publishing schedule so that you know what to expect. Hopefully, this allows for a more streamlined reader experience.

Thank you for being a reader and supporter. If you’ve found value in these insights, consider becoming a paid subscriber. Your support helps me continue developing practical tools and resources—and stay free from the influence of major platforms and organizations. It allows me to share an honest, educator’s perspective on AI without the sales pitch.

Second Housekeeping Note: I wrote this post several weeks ago and scheduled it for today. Then, on Wednesday, OpenAI announced it is providing ChatGPT Enterprise to all federal employees for $1 per agency. This is a big news item that deserves analysis, but it will require more time than I currently have at my disposal to digest and analyze. However, rather than ditch the article completely, I’ve tried to filter in some snap analysis of this development without tossing the original narrative — which I believe very much still applies. Going forward, I aim to keep closer tabs on AI-Government training and partnerships and execute a deeper dive in the future. If you have any tips or recommendations for coverage in this field, please let me know.

Enjoy, and thank you for being a loyal subscriber.

The Neglected Corner of AI Training

In my experience, most of the attention around AI training has gone to corporate and school settings. Companies are hustling to jam GenAI into everything they do, often without a roadmap or any clear vision - and their efforts are creating more headlines than anyone can keep up with.

Education is the same. It is receiving just as much attention as the corporate world, if not more. And K-12 schools and universities are taking note - the demand for AI training has skyrocketed over the last year.

But behind the narratives of both sectors lies an uncomfortable truth: government employees need training too. In many cases, they are flying blind in their efforts to catch up.

The risks and benefits of GenAI use in their work are nuanced and complex, and yet few local governments are receiving the funding or support needed to bring clarity to AI use in a way that would produce meaningful change.

OpenAI’s offer this week of ChatGPT to federal agencies for $1 per agency is certainly a positive, but it does not extend to local governments, which underscores a reality that is not receiving nearly enough press.

This crystallized for me last month when I led a three-hour training for 125 city clerks in the state of Florida through the Florida Association of City Clerks.

What follows is a description of their perspective and an overview of the market. And it’s a sector that affects us all - so we should perk up and pay more attention to this space as the technology develops.

Numbers That Jump Off The Page

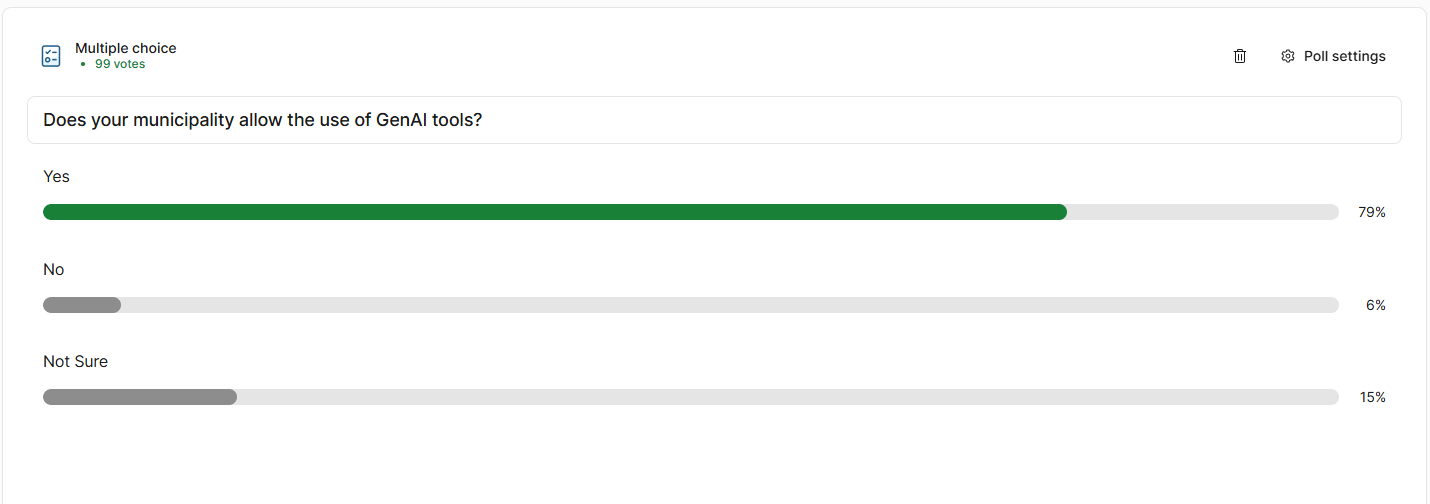

During the training sessions with Florida clerks, I polled participants on a series of questions to elicit a snapshot of their experience and opinions. Here's what nearly 100 city clerks said about AI in their workplaces:

79% are allowed to use generative AI tools in their work.

Just 2 out of 100 indicated their municipality has any policy regarding the use of AI.

Two. Out of 100.

Take a moment to absorb this. Almost 8 in 10 city clerks can deploy AI, but only about 2% have a policy to guide them in doing so securely, ethically, or effectively.

It is certainly understandable that local governments have not developed robust policies. But training comes first - and as I’ve seen in my primary work in the education field, training and cautious experimentation at the grassroots level are the most effective first steps towards policy creation. It is through on-the-ground exploration that organizations gain visibility into the type of policies that should govern employee use of the systems.

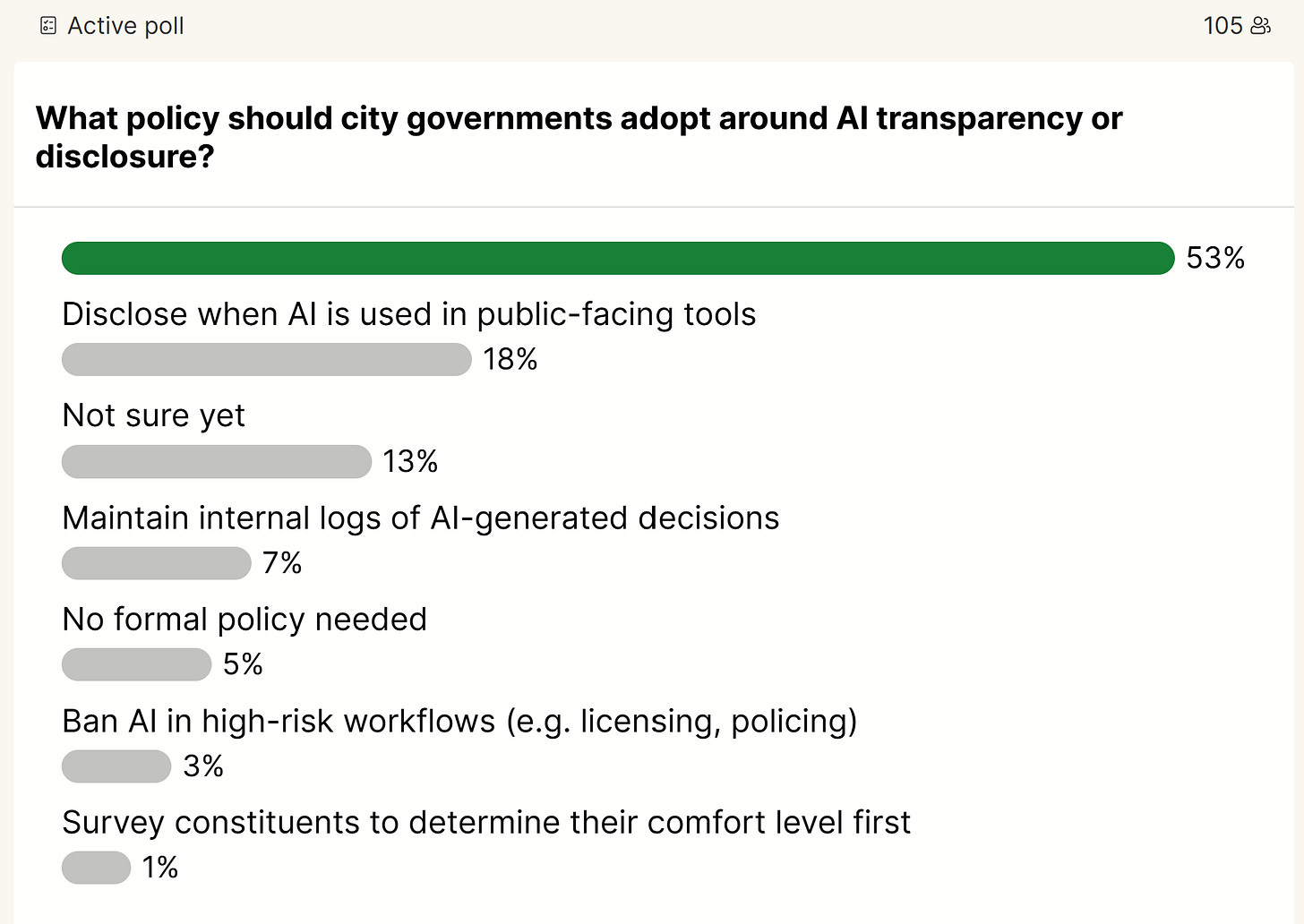

Separately, an audience member encouraged me to ask whether city clerks think disclosure of AI use to local citizens should be required. So I asked the same group about transparency policies - should citizens be told if AI is deployed in government decision-making? Here’s what they had to say:

53% replied cities should be transparent about AI usage in public-facing applications, 18% were not yet sure, and 13% replied they should keep internal records of AI-based decisions. The remainder were divided between wishing for no formal policy (5%) or blanket prohibitions in high-risk areas (3%).

The most eye-opening point? When I spoke with individual clerks at breaks, most claimed they'd "taught themselves," had "never attended AI training," or "didn't know what ChatGPT was." These are the officials who keep public records, run elections, and deal with sensitive citizen data.

Why This Matters (Even If You're Not a Government Worker)

City clerks handle everything from meeting minutes and municipal budgets to business licenses and public records requests. In small towns and rural settings, a single clerk can be the whole administrative foundation for local government. They're expected to be flawless and are hardly ever thanked for their service, and now they're also expected to manage AI adoption with no training and little direction.

If you're an educator reading this, think about how overwhelmed you felt when AI exploded into your world. That feeling of "I should probably know how to use this, but where do I even start?" Now imagine having that same pressure, but instead of potentially messing up a lesson plan, you could accidentally violate public records laws or compromise citizen privacy.

They're in for the same AI overwhelm that struck teachers but with greater consequences and less assistance.

We're All Taking These Risks

Further, GenAI use in local government has an even more direct impact on our daily lives than GenAI use in education, whose effects tend to be felt on a longer timeline that is more difficult to measure. A quick scan of headlines in the space reveals that this lack of clarity - understandable as it may be - is filtering into our daily lives in ways that we cannot always see.

Security Nightmares: In April 2024 New York City’s “MyCity” business-help chatbot told entrepreneurs it was permissible to dock workers’ tips and ignore required schedule-change notices. The city officials defended the system, but these types of mishaps don’t inspire confidence. Boston’s CIO roll

Wasted Resources: Deloitte’s 2025 TMT forecast reports that only 30% of generative-AI pilots ever make it into full production. The reason? Governance and talent gaps are the main culprits, they say. And Cohere’s May 2025 public-sector review reaches a similar conclusion, bluntly stating that “most government AI is stuck in pilot mode.”

Missed Opportunities: This is the sad part. As I interacted with clerks at the conference, I learned that their jobs are more taxing and thankless than I originally perceived. In this respect, GenAI could actually help these jobs be less demanding and more rewarding. But with no training, we put individuals in the position of fearing what can actually assist them in serving the public better.

OpenAI’s release this week shared several examples of this type of beneficial GenAI usage: “In a recent pilot program, Commonwealth of Pennsylvania employees using ChatGPT saved an average of about 95 minutes per day on routine tasks. In North Carolina, 85% of participants in a separate 12-week pilot with the Department of State Treasurer reported a positive experience with ChatGPT.”

What's Happening at Higher Levels

The good news is that the federal government is pushing more investment into the space. The Partnership for Public Service invested $10 million in a new Center for Federal AI which opened in March 2025. I look forward to seeing where their work leads.

The General Services Administration ran a course series that registered 12,000 government workers and earned a 94% satisfaction rate. Another good sign, but consider how many workers missed their opportunity to engage through a lack of funding or time.

There is also a more subtle concern in this data. A high satisfaction rate may be a product of false positives: many people’s first exposure to GenAI leads to “wow” moments that cloud the actual effectiveness of learning experiences.

On another positive note, this week’s $1 deal between OpenAI and federal agencies includes access to all frontier models for one year, with an additional 60-day period of access to advanced features like Deep Research and Advanced Voice Mode. OpenAI is also pledging the creation of a dedicated government user community and tailored introductory training through the OpenAI Academy. They are also partnering with Boston Consulting Group and Slalom to aid the efforts.

Last, they are offering to safeguard government inputs and outputs in the same manner that they currently do for businesses. GSA has also issued an “Authority to Use” for ChatGPT Enterprise, giving agency employees coverage for their GenAI usage.

It is a good sign that agency employees are receiving low-cost access to frontier tools and high-quality training. But what I’ve seen in education is that the best training occurs at the grassroots level. Safe and effective GenAI usage is nuanced, complex, and highly context-specific. Broad-scale efforts at GenAI training often create experiences laden with the aforementioned false positives and confirmation bias - as I saw with my students and have continued to see with novice adult users.

Moving the needle in this space will require concerted exploration, hands-on training, and collaboration across agencies and local municipalities to ensure that equity gaps do not widen and employees are able to access benefits without developing dangerous usage patterns. That’s why federal level training is only a drop in the bucket when you consider the uphill climb facing local municipalities.

The Local Bright Spots

On a local level, a handful of municipalities have taken meaningful steps towards thoughtful integration that are worth sharing. My hope is that these examples serve as models for city and county agencies around the country as they seek to understand GenAI’s role in their work.

Boise, Idaho implemented an "AI ambassador" program wherein volunteers spread the word throughout the departments. Their adoption of AI grew tenfold because it wasn't a mandate imposed from the top. As humans, we are a social species. We learn from each other. This type of dissemination of GenAI learning is the right way to bring about real, positive change.

Wentzville, Missouri mandates in-person and virtual generative AI training for personnel. The aspect that sticks out here is the in-person approach. I’ve done countless webinars in this space and can definitively state that they pale in comparison to the pro-social approach of convening small and large groups of people in a room together.

Local governments should push for as much in-person learning and collaboration as possible. This is the formula for making meaningful gains. However, if they are not given support in the form of funding, time, and guidance, these collaborative learning sessions cannot happen at any meaningful scale.

If You’re Running a Training…

Many local municipalities are running trainings in-house. If you are a local official tasked with training your members, I wanted to share some advice gleaned from my experience training over 2,000 GenAI users over the last year.

Begin with Why: Don't start with algorithms. Start with “here's the way this will help you - and here’s why we need to spend a concerted level of energy learning effective use habits.” For example, when I showed clerks how AI could assist with meeting summaries - one of the most tedious parts of their job - you could see the light bulbs turn on. But they also appreciated understanding that there was more to using AI well than just pressing a button, and expressed an appetite for further nuanced learning.

Show, Don't Tell - And Then Engage: Learners need an opportunity to experiment on their own, share, and ask questions. Don’t fall into the “Sage on the Stage” trap. After layering in motivation and meaning, create opportunities for “students” to draw their own conclusions. You might be surprised at what surfaces.

Acknowledge the Fear, Risks, and Dangers: No one likes an AI Hype Person. We’ve all been watching the news enough over the last two to three years to understand that AI can do cool things. Instead, layer in discussions about the risks and dangers with true, meaningful acknowledgement, rather than brushing past them. I can’t tell you how many times I’ve been told after a workshop that the members appreciated the balance between demonstrating the benefits and acknowledging the risks.

Make It Personal and Practical: Where I’ve failed in the past is in bringing examples to audience members that did not speak to their domain. If you are planning a training session, make sure you understand your audience’s day-to-day work, and then translate the meaningful GenAI use cases into their field. This not only speaks to the learners themselves but also creates visibility into the risks and benefits. As a student, if I can’t understand the example that is provided, then I can’t apply it in my own work. That’s where growth stalls.

Last, try not to sell anything. That is sometimes easier said than done, as most people in training positions are inherently seeking to achieve a specific goal or sell a product. But the truth is that the learning experience is diminished dramatically as soon as the audience perceives a grift. This is the same as teaching K-12 or University students. The moment a student thinks the teacher is trying to sell an idea rather than allow the individual to draw their own conclusions, the learning stops. Don’t fall into that trap.

Call to Action

Most of the readership of this blog is in education, not local government. But every one of us is a citizen. And as citizens, we share responsibility for the health of our society and government.

So don’t leave this to the side. You carry more authority in ensuring it occurs than you realize. Use it:

Urge your local government to adopt AI policies. These policies will likely have to remain broad, flexible, and abstract in the early days. That is okay. Some guidance is better than no guidance, even if policies come with the caveat that rules and guideposts might change or move.

Push for training for public employees. If only for the good of your neighbor, find ways to stand up for public employees. They wield an immense amount of unseen weight in our daily lives, for better or worse. Don’t anonymize them. Treat them like you would a colleague.

Support experimentation. This may seem counterintuitive given the risks outlined earlier in this article, but the truth is that policies need to be formed from the ground up - not top-down. Actual employees need an opportunity to test GenAI systems in low-stakes tasks and report back their findings to administrators with the power to set policy. When it comes to GenAI in 2025, this is the nature of the beast.

Those clerks I ran into in Florida want to serve their communities better. They want to learn. They just need someone who's going to actually teach them instead of expecting them to soak up things along the way on their own.

We are asking public officials to prepare for the revolution in AI with a paper map and a compass. It's time to provide them with appropriate education.

The key to an effective government's future isn't having the latest AI technology. It's having the kind of people who can use it responsibly. And it starts with treating government workers like professionals instead of an afterthought.

What is your local government doing with AI training? Have you raised the issue? Let me know in the comments, and if you know of better examples of AI training within government, I'd like to hear about them.
