AI Literacy is a Soft Skill

AI Literacy is too big, and an introduction to the role of the Humanities in LLM Literacy

This is the full version of an article posted two weeks ago on Michael Spencer’s AI Supremacy blog.

In the realm of modern buzzwords, few are as pervasive—and as misunderstood—as "AI Literacy." It’s the kind of term that gets tossed around in every meeting, every conference, every article that dares to touch on the future of technology. We hear it constantly: "You must learn AI," "AI won't replace you, but someone who knows how to use it will," and "We need proper AI training."

These statements, while repetitive, point to an emerging reality: the pressing need to define and teach AI Literacy. According to researchers at Georgia Tech, AI Literacy encompasses a range of competencies that enable individuals to critically evaluate AI technologies, collaborate with them effectively, and use them as tools in various aspects of life.

Yet, when you dig into the details, the concept starts to feel overwhelming. The infographic produced by these researchers attempts to capture the essence of AI Literacy, but instead of clarity, it offers a labyrinth of skills, competencies, and knowledge areas—each as intricate as the next.

The Problem of Scale

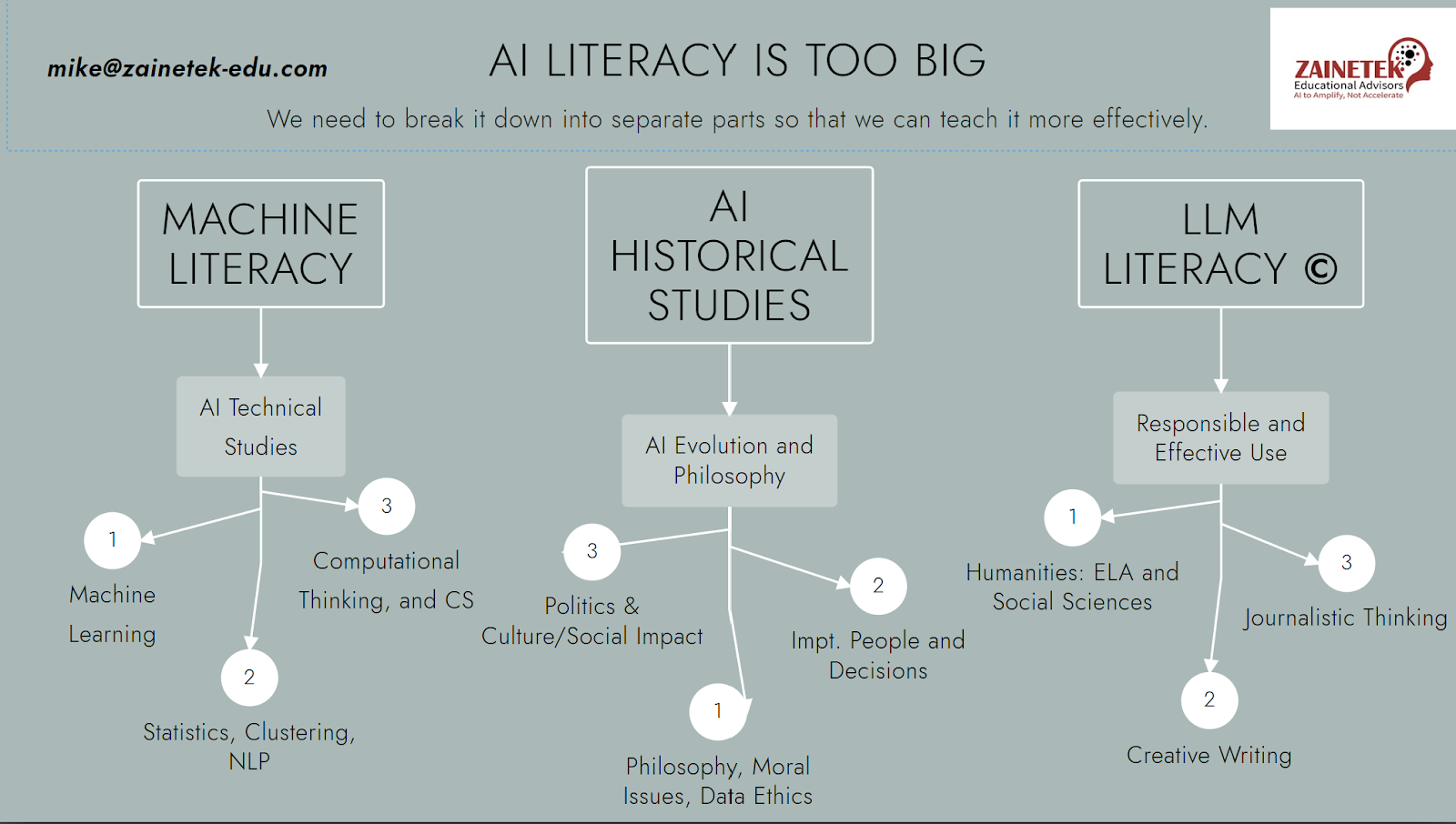

This complexity is why I’m writing this. AI Literacy, as it stands, is too vast, too vague to be practical. There is a pressing need to break it down into digestible pieces if we’re ever going to integrate it into education and society at large.

To understand this, consider the state of education today, where AI is either heralded as the ultimate learning tool or decried as the destroyer of critical thinking. Humanities teachers, in particular, find themselves on the defensive. Traditional assessments like essays are rapidly becoming obsolete, replaced by AI-generated content that can mimic student work with a concerning level of accuracy.

In the face of this shift, a new form of digital literacy is being called for: one that encompasses the safe and effective use of AI. But over the past year, educators seeking to define the term, and to work out how to teach it to the next generation of students, have continued to run up against a major obstacle: the concept of AI Literacy is too broad, too ambiguous, to be effectively taught or learned.

Consider the European Union's AI Act, which mandates AI Literacy training for corporate professionals. The law's requirements are maddeningly unclear, demanding that staff possess "sufficient skills, knowledge, and understanding" of AI and its risks. But what does this mean? What skills? Which risks?

The Danger of Semantic Satiation

This is where AI Literacy stands today. Its expansive and confusing nature has led to a backlash in education circles. Raise the term “AI Literacy” in a conversation with a university professor and watch their eyes glaze over, roll, or even shut. To be honest, I am not sure I blame them.

Arvind Narayanan and Sayash Kapoor, respectively a professor of computer science and a PhD candidate at Princeton University, have pointed out that the term “AI” itself is overly broad, encompassing technologies with vastly different levels of competence. The same critique can be applied to AI Literacy: it’s too vast, too varied to be a single discipline.

As I see it, the blame for the current state of affairs lands in one of three areas. Either the education market has stuffed so many concepts under the umbrella of AI Literacy that it has become meaningless, it never had a clear definition to begin with, or it is a victim of semantic satiation — the phenomenon where repeated words lose their impact. In each case, the catch-all ambiguity of AI Literacy now serves to obscure more than it reveals.

The Path Forward: Narrowing the Focus

To move forward, we must break down AI Literacy into smaller, more manageable disciplines. These should align with existing educational frameworks, making them easier to teach and learn. I propose three subdivisions:

Machine Literacy: This is the study of the AI engine itself. It focuses on the underlying technology, employing statistical analysis and computational thinking to understand what’s happening beneath the surface.

AI Historical Studies: This discipline covers data ethics, history, and the cultural impact of AI. It asks the big questions: How did we get here? What will the future look like under AI’s influence?

LLM Literacy: The most crucial of all, LLM Literacy deals with Large Language Models—the AI tools most commonly used by the average person. Unlike Machine Literacy, which requires a deep understanding of AI's technical aspects, LLM Literacy is about practical interaction. It’s about knowing how to write, read, and critically assess the outputs of these models.

The Car Engine Analogy

To illustrate this, consider the analogy of a car engine. Do you need to understand how a car engine works to be a safe and effective driver? Not really. Sure, knowing the mechanics might make you slightly more attuned to your car's performance, but this knowledge is only applicable on the margins. Driving requires a wholly different set of skills from building, maintaining, or even understanding the engine itself.

Similarly, to use AI effectively, you don’t need to understand the intricacies of predictive analytics, machine learning, or neural networks. What you do need are the skills to engage with AI tools—especially LLMs—in a thoughtful and reflective manner.

Acknowledging this reality makes it easier to split AI skills across disciplines. To be clear, I am not arguing for an abandonment of the study of the engine, only for a realization: studying the engine prepares you to be a mechanic, not a driver. In the context of AI Literacy, this allows for clean splits across disciplines, making each skill easier to teach and embed among adults and students alike.

Said differently, you would not hire a mechanic to teach you how to drive. Our Driver’s Ed Instructors, rightly, do not discuss the engine with novice drivers. Instead, they focus on the rules of the road, the strategies for safe and responsible driving, and developing situational awareness in the driver-in-training.

In the above chart, you can see that I hand off the responsibility for teaching safe and effective driving of Large Language Models – specifically – to writers, journalists, and English teachers. People who have spent their entire lives studying words, language, and communication.

The same goes for understanding the ethics or history of AI. While it’s important to know that cars contribute to environmental degradation or that the oil industry has fueled global conflict, this knowledge doesn’t impact your ability to drive safely. In the same way, understanding AI's ethical implications – such as questions around Data Ethics and its impact on our humanity – is valuable, but it’s not essential to using AI effectively. They are different skills.

Mastering the Art of Engaging with Large Language Models

With respect to Large Language Models, “literacy” must first acknowledge that using them is an art, not a science. It draws on the skills of creative writing and journalistic thinking, since in many ways an engagement with an LLM mirrors an interview: question, answer, question, answer, with a broader aim in the mind of the questioner.

In that vein, we can more easily accept that the future of safe and effective use lies in the hands of the professionals most acquainted with the art of the written and spoken word. If you are working with an engine that produces language, with whom would you rather confer – a technologist or a writer?

With that in mind, the delivery of “LLM Literacy” can be broken down into a series of understandings that will allow us to shift the lens of safe and effective use towards a more Humanities-centered approach.

Step 1: Command the Power of Words

The very first move in this strategic dance with a Large Language Model (LLM) is the craft of writing—a question, a request, or even a simple statement. Some may dress this up with the title "prompt engineering," but this will soon be a relic of STEM-centered jargon that has been applied to a Humanities skill. Consider the masters of language throughout history—did they ever claim to "engineer" a thesis statement or a question? No. They wrote them. Language is an art form, and in this realm, you are an artist, not an engineer.

Step 2: The Mirror Reflects

When the LLM responds, it mirrors back to you in text form. While some models can create images or videos, this article focuses on models that produce text. Even in the case of image generators, the engine is still manipulated by words. And when an LLM produces text, it is via close reading that a user can determine its veracity, value, or utility.

Step 3: Decipher the Code

Our next realization should be that analyzing the outputs of an LLM is not about verifying facts alone; it is about evaluating value. When using the LLM as a brainstorming ally—a role where it thrives—it does not deal in absolutes. Ideas are neither true nor false; they are either valuable or worthless.

Our focus on hallucinations—when an AI fabricates a claim or a fact—is holding us back. In the evolving world of AI literacy, you must understand that your primary task is to evaluate the usefulness of the output – especially in the case of a collaborative dialogue – not just its accuracy. On the other hand, if your goal is research, the path is clear: verify the LLM outputs through the sources provided. But most often, your task is to discern what value the LLM's output brings to your table.

Step 4: Reflect and Adapt

Because LLMs produce language so rapidly, we are often tempted to keep up. Not only will we fail at that endeavor, but it is within the discipline of slowing down and reflecting that we can ensure a level of responsibility and safety. Ask yourself: Where has your strategy led you? Where do you wish to go next? The process is one of metacognitive decision-making, a trait shared by great writers and journalists. This continuous cycle of reflection, in conjunction with your imagination and creativity, allows you to chart the best course forward.

Step 5: Refine the Written Word

Having reflected, you return to writing. You refine your request, provide feedback, and strategically structure your language to guide the LLM toward your desired outcome. The principles of good writing—broad-to-narrow structuring, clarity of context—apply here as well. If you are already skilled in the art of communication, you will find these techniques natural extensions of your abilities.

Step 6: The Endless Cycle

At this point, working with LLMs becomes simply a question of habit and repetition. It is a cycle of writing, reading, reflecting, and writing again. To be sure, there will be some cases where the model is used as a means to an end – an efficiency-booster. But with a deeper and more purposeful approach, the user gains an awareness of its larger universe of possibilities. Understanding the parameters of what it can do – through the skills of the Humanities – will allow you to make concerted choices regarding the use of an LLM for efficiency or for depth, all the while maintaining a deeper awareness within your subconscious.

Conclusion: A Call for Practical AI Literacy

In conclusion, AI Literacy must be redefined, subdivided, and made practical if we are to teach it effectively. An approach to LLM Literacy through the lens of the Humanities will allow us not only to teach effective use but also to develop the writing, reading, and reflective skills that will be vital to the future health of society. We must move away from the sprawling, undefined concept that AI Literacy has become and focus on specific, actionable skills. Only then can we hope to integrate AI Literacy into education and, by extension, into society as a whole.