AI Literacy: Learning from Two Centuries of Reading Instruction

What Two Centuries of Reading Reform Teach Us About Today’s AI Fluency Debate

Drafted originally as an academic paper and adapted for this blog. I used ChatGPT o3 and Claude.ai to support research, copy‑editing, and image generation; all analysis and final narrative are my own.

Over the past several months, a wave of essays and opinion pieces has cast doubt on the concept of "AI literacy," questioning whether it's a meaningful or measurable skill at all. Critics argue the term is vague, inflated, or impossible to assess. But these concerns aren't new. They closely mirror earlier debates about reading literacy, which was once seen as similarly unquantifiable. This piece traces how reading literacy developed its standards, pedagogies, and measurement tools, and considers how the same development might unfold for AI literacy.

From Bible Reading to Standardized Assessment: The Evolution of Literacy Instruction

In the 1600s, literacy instruction began with the simple "ABC method," exemplified by the hornbook: a paddle-shaped board inscribed with the alphabet, syllables, and the Lord's Prayer. The focus was primarily on enabling children to read religious texts, particularly the Bible.

Massachusetts established the first education laws in North America with the "Old Deluder Satan Act" of the 1640s, which required towns to ensure children could read to prevent "satanic deception rooted in ignorance of the Bible." This early view of literacy was strictly binary: one either could or couldn't read, with little consideration for nuanced assessment or degrees of proficiency.

First published in 1783, Webster's Blue-Backed Speller remained central to reading instruction for more than a century, primarily serving the upper classes because formal education was not yet widely available. During this period, measuring literacy was crude at best: it was often determined simply by whether someone could sign their name or read a basic text aloud, as evidenced by historical census data from the period.

A significant shift occurred in the mid-19th century when Horace Mann, often called "the father of American education," introduced new approaches to reading instruction. Mann observed that children were “bored and ‘death-like’ at school” and advocated teaching students to read whole words, rather than tediously sounding out letters, to engage their interest.

Students in today’s world increasingly view education as meaningless. As a student, why pay attention in class if I can ask AI to complete an assignment for me at home? If they weren’t already “bored and death-like,” they will be soon.

Early versions of AI literacy instruction—like handing students pre-written prompts to paste into ChatGPT—are the modern equivalent of rote phonics drills. There's no genuine engagement, no decision-making, no cognitive ownership. Just a mechanical process, carried out on command.

But Generative AI, used well, doesn’t reward passive use. It demands awareness. As one recent paper puts it, “Drawing on research in psychology and cognitive science, and recent GenAI user studies, we illustrate how GenAI systems impose metacognitive demands on users, requiring a high degree of metacognitive monitoring and control.”

That means students aren’t just completing a task. They’re managing a process: deciding what to ask, interpreting what comes back, and evaluating whether the AI helped or hindered their understanding.

If we recognize that reality, then the definition of “AI literacy” changes entirely. It becomes less about technical skill and more about teaching students to monitor their own reasoning—to reflect, adapt, and communicate with clarity. In short: metacognition on the page.

That shift opens the door to powerful new instructional methods. Purpose-setting prompts before the AI exchange. Structured reflection afterward. Discussions about what worked, what failed, and how their thinking evolved. Each of these techniques strengthens metacognitive habits and deepens soft skills like curiosity, resilience, and critical thinking.

For more reading on this subject, consider:

- “Learning to prompt with a counterargument can make you smarter”

- “How talking to AI Is changing the way we work, think, and feel” (Substack)


The Development of Measurement Tools

Method is only part of this story. Measurement is another.

Sophisticated measurement of reading literacy didn't emerge until much later. In the late 19th century, literacy rates began to be systematically recorded through census data, but these early measurements were rudimentary: often self-reported and binary (literate or illiterate).

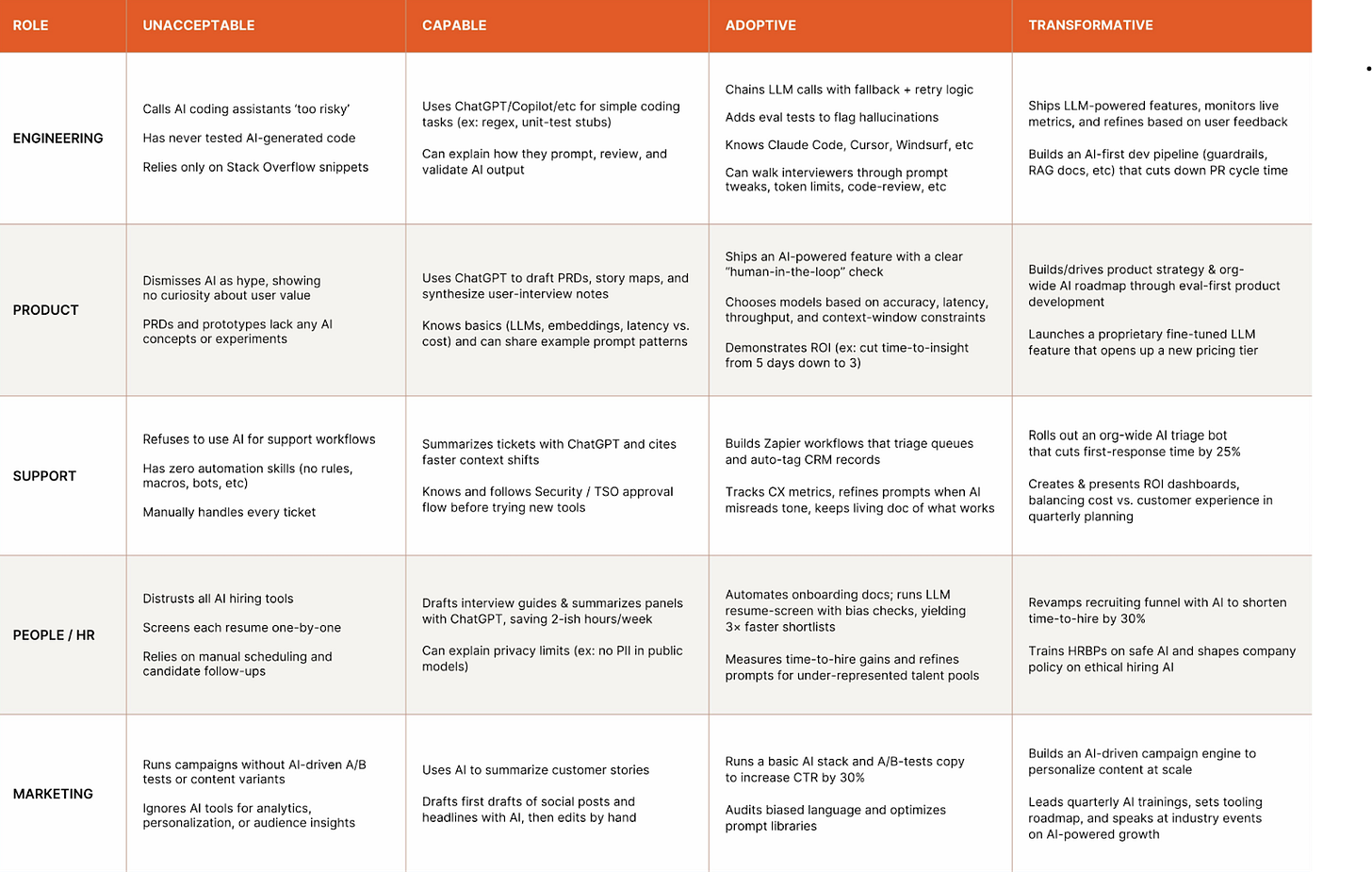

This mirrors how AI literacy and fluency are measured today. To assess whether a candidate is “literate,” employers ask notably vague questions like, “How do you use AI in your personal life?” Frameworks such as Zapier’s offer only abstractions and loose definitions of what it would mean to be fluent.

It wasn't until the 20th century that more nuanced measurement tools for reading literacy were developed. The concept of literacy expanded beyond basic reading ability to include varying levels of comprehension, writing skills, and application of knowledge. Standardized testing emerged as the predominant method of assessment, introducing the idea that literacy existed on a continuum rather than as a binary trait.

Modern Literacy Assessment

Today's comprehensive literacy assessment involves multiple dimensions and approaches. Reading is now understood as a complex skill involving decoding, fluency, vocabulary, and comprehension. Modern assessment tools range from universal screenings and progress monitoring to diagnostic assessments and standardized achievement tests, such as the Wechsler Individual Achievement Test-Third Edition (WIAT-III).

But even these diagnostics fall short, largely because of the subjectivity embedded in teaching and measuring reading skills.

In my first teaching role at Achievement First Public Charter Schools in Brooklyn, I vastly underestimated how much hands-on guidance and measurement it takes to produce real reading growth. In my Guided Reading classroom of six students, I leaned on “Choral Reading Strategies” as an engagement mechanism, only to be told that Choral Reads would not move the needle and in fact often produced false positives.

Instead, Guided Reading (GR) teachers assessed proficiency one-on-one, measuring oral fluency and listening to students’ spoken answers to comprehension questions in the moment. Grading those answers required experience and expertise on the part of the teacher. The range of correct answers was more subjective than I had imagined, and judging them called for the kind of nuanced “grading” that only a human could provide.

Digital diagnostics were only one tool in the kit. This is why, when I advocate for evaluating student interactions with AI for metacognitive awareness and control, I always emphasize that this measurement cannot be handed over entirely to GenAI systems or any other technology. The range of potentially “correct” approaches to using GenAI is far too subjective and context-specific to be fully measured by a technology tool, GenAI or otherwise.

In this respect, the challenges of AI literacy closely mirror those of teaching and measuring reading literacy. We are still in the infancy of developing robust pedagogical methods for delivering and measuring it.

And the concept of core reading literacy has continued to evolve, with the digital age introducing new literacies involving online reading, multimodal texts, and new media formats. This progression has required more than 200 years of direct instruction and research to develop concrete, quantifiable measures of reading literacy. The journey from simplistic binary concepts to sophisticated multi-dimensional assessment frameworks provides a valuable model for understanding AI literacy development.

AI Literacy: The Early Days

We are arguably only 5 to 10 years into the developmental journey surrounding AI literacy, fluency, or “awareness” as a fundamental skill. The skepticism about whether AI literacy can be measured echoes historical debates about reading assessment.

Early benchmarks are emerging. The OECD is piloting an “AI‑literacy” module for an upcoming PISA cycle, UNESCO’s Guidance on Generative AI outlines teacher competencies, ISTE has woven AI skills into its standards, and Anthropic’s Creative‑Commons “AI Fluency Framework” offers discipline‑agnostic classroom activities. But, like 19th‑century reading surveys, these first drafts will need years of refinement.

The Path Forward: Learning from History

The parallels between traditional and AI literacy development suggest several important lessons:

Evolving Definitions: Just as the definition of literacy has continuously expanded from basic letter recognition to complex textual analysis, AI literacy's definition will undergo significant evolution as our relationship with artificial intelligence technologies develops. Early attempts to define the concept should be viewed as starting points, not final frameworks.

Measurement Takes Time: The development of robust, widely accepted assessment tools for reading literacy took generations. We should expect AI literacy measurement to follow a similar trajectory, requiring significant research, experimentation, and refinement before reaching maturity.

Hands-On Assessment Reality: Effective literacy assessment has always been labor-intensive. “Grading the chats” is the best way to measure a student’s “AI awareness” or fluency, but it is hard, and it takes time. It is therefore important to keep the big picture in view: meaningfully evaluating AI literacy skills will require personalized attention and a significant investment of time.

Context-Specific Skills: Both reading literacy and AI literacy are highly context-dependent. A person might demonstrate strong literacy in one domain while struggling in another. I personally witnessed students who understood fiction texts far better than nonfiction, even though both were written at the same reading level. This mirrors the difference between using a text generator and using an image generator, or between using AI for productivity and using it for creativity. Fluency in one AI domain does not automatically indicate fluency in all domains.

From Binary to Spectrum Understanding: Literacy assessment evolved from a binary (can/cannot read) to a spectrum of proficiency levels. Similarly, AI literacy should be conceptualized as existing along a continuum rather than as a simple yes/no proposition.

The difficulties associated with teaching and measurement need not be viewed as a burden. Instead, we can see them as an opportunity to deepen our metacognition and self-awareness through our interactions with the tools. Viewing AI literacy as “metacognition on the page” can help chart meaningful pathways toward greater intellectual skill and fortitude in the era of GenAI.

Moving Beyond Skepticism

By acknowledging the parallels between traditional literacy development and emerging AI literacy, we can approach the latter with patience and historical perspective. The challenges in defining and measuring AI literacy don't mean the concept is imaginary. Instead, they indicate we're at the beginning of a long educational journey.

The skeptics who question whether AI literacy is real because we lack robust measurement tools are repeating a pattern seen throughout educational history. New literacy domains always begin with unclear definitions and imprecise measurements. Over time, as understanding deepens and assessment approaches mature, these ambiguities gradually resolve.

Conclusion: Embracing the Journey

The evolution of literacy instruction and assessment provides a valuable roadmap for developing AI literacy. By studying this history, we gain perspective on current challenges and confidence that, with time and focused effort, AI literacy will become as measurable and teachable as traditional reading skills.

Rather than dismissing AI literacy due to measurement difficulties, we should embrace the opportunity to shape its development intelligently, drawing on centuries of experience with literacy instruction. The path may be long, but the destination, a society equipped to engage thoughtfully with AI technologies, is worth the journey.
