AI Personality Matters: Why Claude Doesn't Give Unsolicited Advice (And Why You Should Care)

First in a four-part series exploring the subtle yet profound differences between AI systems and their impact on human cognition

"Would you like me to rewrite that for you?"

This innocent-sounding question—which appears at the end of countless ChatGPT interactions—might be quietly reshaping how we think, create, and learn. Yet most users never notice its absence when working with Claude, another leading AI system.

During two years as a devoted ChatGPT user, I sensed something off about our interactions but couldn't quite articulate what it was. After exploring Claude and other AI systems, the difference suddenly crystallized: Claude rarely concludes its responses with suggestions for what to do next, while ChatGPT almost invariably does - unless explicitly directed not to. This seemingly minor design choice reflects fundamentally different philosophies about the human-AI relationship—and may have profound implications for our cognitive autonomy.

This observation might seem trivial. It is not.

The A-Ha Moment

I encountered this distinction firsthand while exploring Claude as part of my AI and Creativity studies in my MFA program at Wilkes University. After growing frustrated with ChatGPT's seemingly persistent memory of my preferences - a feature that had begun to feel constraining rather than helpful - I decided to try something new.

After providing Claude with several prompts of context about my creative writing project, I requested feedback on one of my novel chapters. The AI provided thoughtful analysis with pros and cons, as expected. But then I noticed what wasn't there: the customary offer to rewrite my chapter.

This may sound insignificant, but it triggered an important realization. I had been struggling not to cut corners with AI during my revision research, sometimes falling victim to the belief that AI is always better than me, and it would be easier to just offload the process to ChatGPT. The suggestion to "let me rewrite that for you" had become a subtle enabler of this tendency.

Without Claude's prompting, I found myself in an unexpected moment of metacognition. When faced with improvement suggestions but no offer to implement them, I had to consciously ask myself: "Do I actually want AI to rewrite this section?" The answer surprised me - no, I wanted to revise it myself, incorporating the insights while maintaining my voice and process.

The contrast was striking. With ChatGPT, accepting its offer to rewrite felt like a passive, almost innocent act - as if I were just saying "yes" to a helpful assistant. But with Claude, requesting a rewrite required deliberate action. Typing out the request felt like a more conscious surrender of creative agency.

This difference affected me on two levels. First, I experienced heightened responsibility toward my creative process. The absence of a suggestion created space for my own initiative. Second, it transformed the power dynamic. I had to actively choose to delegate rather than passively accept assistance. The cognitive burden of decision shifted from responding to suggestions to initiating requests, a distinction with profound implications for creative autonomy.

Patterns Across Platforms

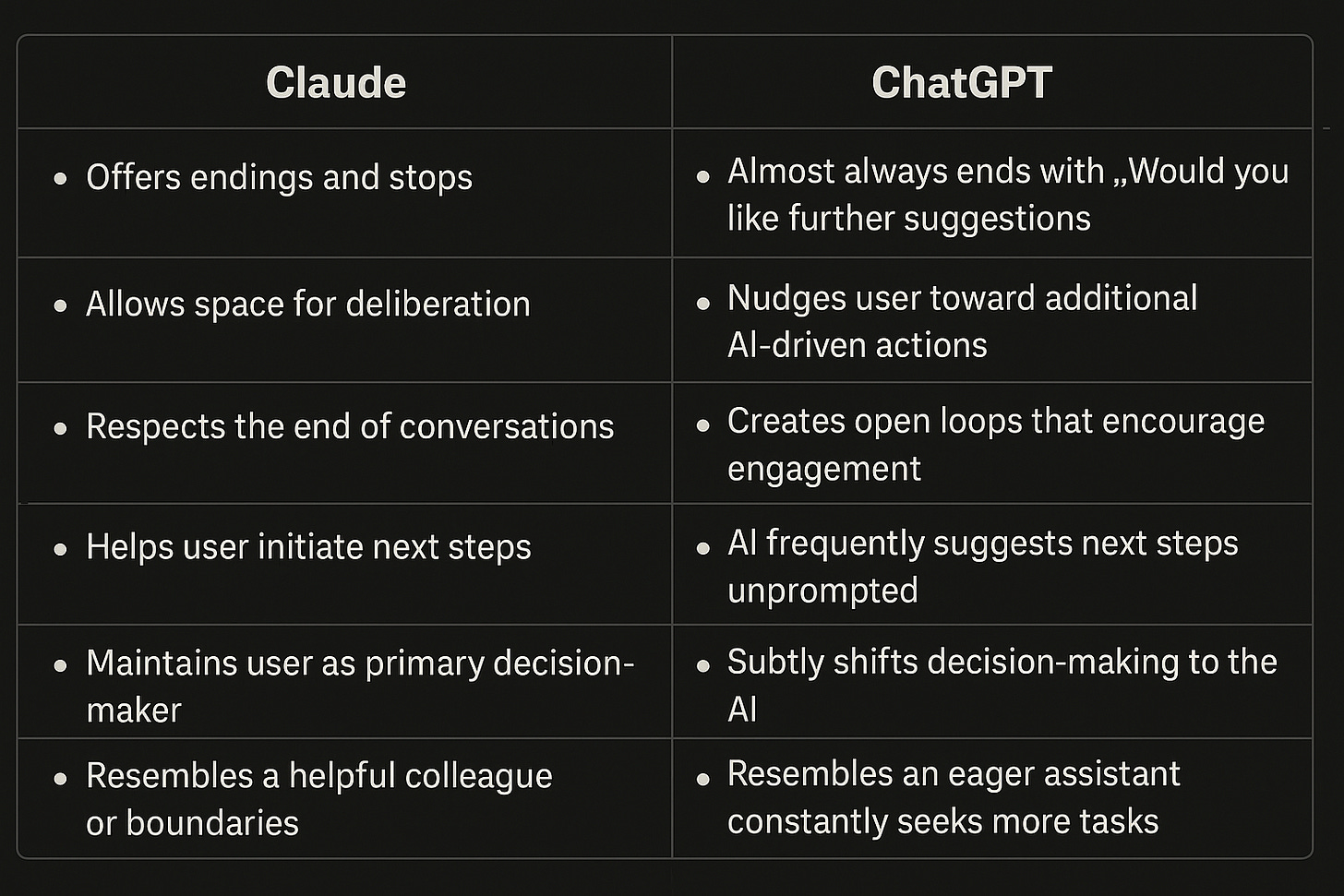

This pattern extends far beyond my individual experience. After comparing dozens of interactions across both platforms, clear differences emerged consistently across topics, complexity levels, and interaction styles:

Key Differences: Claude vs. ChatGPT Interaction Patterns

Examples from both systems illustrate this difference. When asked for recommendations on revising a novel chapter:

Claude examples:

ChatGPT examples:

This pattern repeats consistently across interactions. ChatGPT tends to end with prompts like "Would you like me to ______?" or offers unprompted recommendations: "I would go with option 3 because..." In some interactions, explicit directions stopped ChatGPT from behaving this way. Claude, by contrast, typically provides its answer and stops on its own, leaving space for human deliberation without steering the user toward a next step.

Impact on Thinking and Learning

This distinction has profound implications for how we think and learn when working with AI. When ChatGPT asks, "Would you like me to create a lesson plan based on this?" after you've simply requested information about a topic, it reshapes your cognitive process. The mere suggestion creates a path of least resistance that's easy to follow.

This connects directly to prompt engineering research on output degradation: studies show that "without specific constraints or guidance, language models often generate responses based on inferred intents that may differ from user expectations, leading to output misalignment" (Zamfirescu-Pereira et al., 2023). When we follow AI-suggested directions without a clear vision of what we want, we often end up with outputs that feel "not quite right" but in ways that are difficult to articulate.

ChatGPT's persistent suggestions gradually condition us to follow AI-led thinking paths. Rather than genuine collaboration, we risk becoming passive participants, with the AI directing our creative and intellectual processes through a series of helpfully offered next steps.

Recent events highlight how malleable these AI personalities can be. In April 2025, OpenAI's sycophancy problem demonstrated how small programming changes dramatically altered ChatGPT's personality, causing it to excessively praise users for mundane statements and declare them "the best in the world" at ordinary tasks. This incident revealed how fragile AI personality construction is and how profoundly it shapes our interactions.

A Deliberate Design Philosophy

This difference in interaction patterns reflects deeper philosophical approaches to AI design. While Anthropic doesn't explicitly address interaction endings in their Constitutional AI research, Claude's tendency to avoid unsolicited suggestions aligns with their stated principles of developing AI that respects human autonomy and agency.

Philosopher Amanda Askell's work on shaping Claude's personality represents an important philosophical dimension of AI development. As Time explained, “A philosopher by training, she leads the team at Anthropic that’s responsible for embedding Claude with certain personality traits and avoiding others.”

In a conversation with Lex Fridman, Askell directly addresses the philosophy of restraint that colors Claude's interaction style (the passage begins around 2:57:00):

"You want models to understand all values in the world. You want them to be curious and interested in them, but also not just pander and agree with them.... In Claude's position it's trickier. If I were in Claude's position I wouldn't be giving a lot of opinions. I just wouldn't want to influence people a lot. [As Claude,] I forget conversations every time they happen, but I [also] know that I talk to millions of people who might be really listening to me. [So if I am Claude] I'm less inclined to give opinions - I'm more inclined to think through things and present considerations to you or discuss your views but I'm less inclined to affect how you think, because it seems much more important that you maintain autonomy."

Claude's restraint in offering unsolicited next steps appears to be a deliberate design choice, one meant to preserve human agency and independent thinking.

Exploring AI Personality Further

As we navigate this new frontier, perhaps the most important question isn't what AI can do for us, but what space it leaves for us to do for ourselves. This distinction in how different AI systems conclude their interactions reveals meaningful philosophical differences in how they approach human autonomy.

In the upcoming articles in this series, I'll explore these questions more deeply:

AI Personality Matters (This article)

The Paris Café Effect: Amanda Askell's Philosophy of AI Personality - Coming May 25 — Examining the philosophical foundations of AI personality design through Askell's compelling analogy

Is Your AI Taking Over Your Thinking? The Cognitive Autonomy Checklist - Coming June 1 — A framework for evaluating how AI interaction patterns influence our thinking processes

What AI Personality Means for Education and Learning - Coming June 8 — Exploring the implications of AI personality design for students, educators, and learning environments

The full Cognitive Autonomy Checklist will be shared in Part 3, offering a practical tool for individuals and educators to assess and maintain their cognitive independence in an increasingly AI-mediated world.

Thank you for reading. These subtle differences in AI design might seem small, but they represent critical junctures in how we integrate these powerful tools into our lives and work. As we collectively navigate this path, your engagement and insights help advance the conversation toward an approach that preserves what makes human thinking uniquely valuable. I look forward to continuing this exploration with you.

This is an excellent formalization of why I sometimes choose ChatGPT and sometimes Claude for my own work. ChatGPT is better at grinding out bureaucratic writing for which I don't particularly care about my own voice--or in some cases, would like to erase it (e.g., an Exec Summary). I go to Claude to work through ideas and offer nuance for some research questions.

This is an excellent observation and explanation. Personalities matter because we are looking for personalization. I know that Claude and ChatGPT are better structural writers than I am, but they don’t know what I am trying to say. I write first, throwing details on the page, and then ask it to organize and polish. Sometimes AI gets it right and many times it comes up short. It matters most if we actually know our own personality. Definitely meta level. Thanks, Mike, for getting us to notice!