Flipping the Script on AI Cheating with "Linguistic Fingerprints"

Authorship determination techniques flip the conversation around AI detection and AI cheating on its head.

Yesterday, Wilson Tsu of Powernotes, an EdTech platform I use in my own classroom, wrote a compelling piece on TheHill.com titled “AI cheating is destroying higher education; here’s how to fight it.”

He's got a point that's hard to ignore. Just as we had to rethink what counts as cheating when calculators and smartphones came into the picture, he says, it's time to do the same with AI.

Tsu offers a foundational question for educators to consider: “What input should be coming from the human, and what inputs can come from the AI in order to accomplish the goal of the assignment?” It's all about finding a balance that keeps the essence of learning intact in this new AI era. The way to do that is to redefine assessments.

I couldn’t agree more. Sarah Elaine Eaton explored the concept of “postplagiarism” in a 2023 paper in which she argued that most, if not all, written work in the future will be AI-assisted in some form. We, as teachers, should not be relying on AI detection software platforms that do not work (and will never work). We need to rethink what matters in the process of learning, creating, and writing.

But there’s more.

The process of redefining assessment will take years, maybe decades. Sure, some leaders in the space, like Leon Furze, will rewrite rubrics and model new teaching techniques to help teachers recognize that AI use is likely to exist on a “scale” or a “spectrum.” But as these ideas (very) slowly bake into the education market, students are likely to misunderstand the role that AI should play in their academic lives.

That's why we need another plan of attack on AI cheating: something solid to build on, a way of establishing continuity on which these new assessment techniques can rest. Enter the concept of the Linguistic Fingerprint.

The linguistic fingerprint refers to the idea that each human being uses language differently, and that these differences involve a collection of markers that stamp each speaker or writer as unique.
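As a rough illustration of what such “markers” can look like in practice, here is a minimal Python sketch that computes a few surface features commonly used in stylometry: average sentence length, average word length, type-token ratio (vocabulary richness), and the rate of common function words such as “the” and “of.” The function name `style_markers` and the short function-word list are hypothetical choices for this example; this is not how FlintAI or any specific forensic tool works.

```python
import re
from collections import Counter

# A small, illustrative (hypothetical) set of English function words.
# Real stylometric work typically uses much longer lists.
FUNCTION_WORDS = ["the", "and", "of", "to", "a", "in", "that", "it", "but", "which"]


def style_markers(text: str) -> dict[str, float]:
    """Compute a few simple stylometric markers for a piece of writing."""
    # Rough sentence split on runs of ., !, or ? (a heuristic, not a parser).
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    # Lowercase word tokens; apostrophes kept so "don't" stays one token.
    words = re.findall(r"[a-z']+", text.lower())
    if not words or not sentences:
        raise ValueError("text must contain at least one word and one sentence")

    counts = Counter(words)
    markers = {
        "avg_sentence_len": len(words) / len(sentences),
        "avg_word_len": sum(len(w) for w in words) / len(words),
        # Type-token ratio: distinct words divided by total words.
        "type_token_ratio": len(counts) / len(words),
    }
    # Rate of each function word per 1,000 words.
    for fw in FUNCTION_WORDS:
        markers[f"fw_{fw}"] = 1000 * counts[fw] / len(words)
    return markers


if __name__ == "__main__":
    for name, value in style_markers("The cat sat. The dog ran!").items():
        print(f"{name}: {value:.2f}")
```

Function words are a common choice because writers use them largely without thinking, so their rates tend to stay fairly stable across topics. On very short texts, though, all of these numbers are noisy.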

“Forensic and computational linguists have developed methods that allow linguistic fingerprinting to be used in law enforcement,” writes Roger Kreuz, Associate Dean and Professor of Psychology at The University of Memphis. “Similar techniques are used by literary scholars to identify the authors of anonymous or contested works of literature.”

Linguistic fingerprinting was used to catch Ted Kaczynski. It was used to “out” J.K. Rowling in 2013 when she wrote a crime novel under the name Robert Galbraith. And it was used in 1963 to determine the authorship of twelve disputed Federalist Papers that scholars could not confidently attribute to either James Madison or Alexander Hamilton.

The point? These techniques were created and developed long before OpenAI had its ChatGPT moment. In other words, they don’t seek to detect AI authorship. They seek to detect student authorship.

Therefore, the question of AI detection can be “flipped” on its head. We shouldn’t be detecting AI, we should be detecting student voice.

Asking students to produce a “known document” (a written document that the school or teacher knows was written without any AI assistance) establishes a baseline against which future student writing can be compared. It provides a backup that we (teachers) can use if we get that sneaking suspicion that a student may have used (or over-used) AI to produce a written assignment.
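To sketch how a “known document” might serve as a baseline, the Python example below compares the function-word profile of a known sample with that of a questioned paper using cosine similarity. This is an assumption-laden simplification: the word list, the `compare_to_baseline` helper, and the choice of cosine similarity are all illustrative, and real authorship tools combine many more features and calibrate them against reference data.

```python
import math
import re
from collections import Counter

# Illustrative (hypothetical) function-word list; real systems use far more.
FUNCTION_WORDS = ["the", "and", "of", "to", "a", "in", "that", "it",
                  "is", "was", "but", "for", "with", "as", "on", "so"]


def profile(text: str) -> list[float]:
    """Relative frequency of each function word in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    total = len(words) or 1  # avoid division by zero on empty input
    return [counts[w] / total for w in FUNCTION_WORDS]


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def compare_to_baseline(known: str, questioned: str) -> float:
    """Score how closely a questioned text matches a known writing sample."""
    return cosine_similarity(profile(known), profile(questioned))


if __name__ == "__main__":
    known = "I think the lab was fun and it was easy to do the steps."
    questioned = "The experiment demonstrates that enzyme activity is pH-dependent."
    print(f"similarity: {compare_to_baseline(known, questioned):.3f}")
```

A score close to 1.0 means the two texts use these function words in similar proportions; a noticeably lower score is a prompt for a conversation, not proof of anything. The sketch deliberately sets no cutoff, since any sensible threshold would depend on text length, genre, and grade level.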

Keep in mind that this approach still works when a teacher has allowed a specific type of AI use or assistance on an assignment: not just as a “detection” technique, but as a barometer of “how much” of the paper was not written by the student.

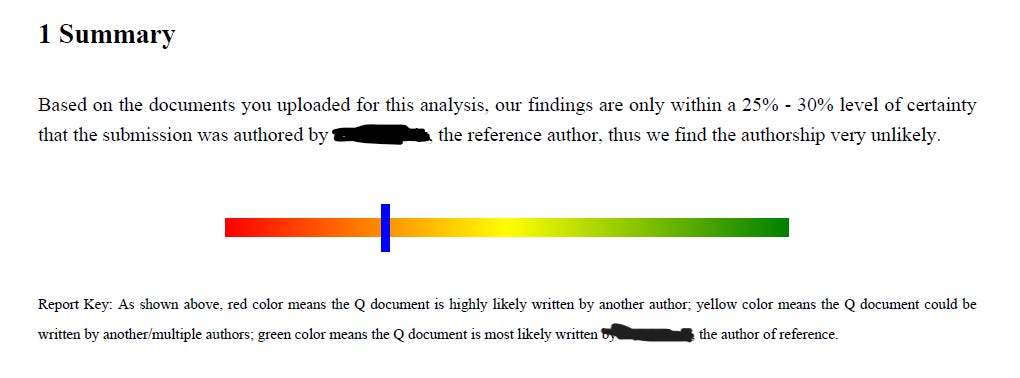

I have used this in my classroom. Below is a screenshot of a report produced via the FlintAI Authorship Determination platform. A student we will call “Student X” wrote a paper in Biology class that felt suspicious to the teacher. He brought it to me, and I ran the essay against an in-class paper he wrote in August. Flint’s platform produced this scaled report.

Going into this analysis, we weren’t necessarily convinced that the student had used AI on his paper. In fact, both the Biology teacher and I doubted the student was even tech-savvy enough to use AI this way. But we both knew it didn’t sound like him. That was reason enough to run a report, which confirmed that the writing was dramatically different from his previous work.

In this case, “Student X” did NOT use AI to produce the Biology essay. He used his mom!

We had an unbiased report to support our thinking in case the parents pushed back when we approached the student. That didn’t turn out to be a problem, since the mother admitted to helping quite a bit, but the Bio teacher and I both agreed it was a “nice-to-have” in case the conversation went south.

FlintAI’s platform evaluates authorship across 13 different vectors and produces a detailed analysis of each one. Below is another screenshot from the sample report.

(I am not sponsored by FlintAI. I just believe in this approach.)

There are critiques of this approach. Namely:

We shouldn’t turn school into a cop-criminal environment just because AI showed up. Students need to know we trust them and not think we are looking over their shoulder all the time.

First, we do trust them! This is a way to make sure that if students misunderstand the role AI is supposed to play in their work, we can flag it in an unbiased way. It also helps us establish a baseline for student writing so that, as we move into an age of new assessment techniques, we have something to build on. How else will we know whether something is “Full AI” or “AI used for Ideas and Structure,” for example?

Second, school has always involved teachers and administrators enforcing discipline and consequences, and it should continue to do so. We can’t just change assessment techniques; we also have to change enforcement techniques. Students are supposed to learn about discipline and consequences in school, and the only way to do that is to have identification mechanisms in place. This is no different from a teacher keeping an eye on the class during a test to make sure there are no wandering eyes. It can also serve as an effective deterrent.

You should want your students’ writing to change. I don’t want my students’ writing in February to be the same as it was in August!

The forensic linguistic fingerprinting approach is robust and thorough. It does not produce a “yes” or “no” but instead a scaled report across a variety of spectrums. Even a dramatic change in student writing from August to February or April is unlikely to fool such a thorough system. I feel confident saying that most middle and high school writing teachers would agree that student writing does not change that much in six to eight months.

And if you really need to, you can produce another “known document” in January! Give an in-class writing assessment and monitor student work closely for 30 minutes to an hour. You can even cut off the Wi-Fi for a bit if you are worried about students sneaking around.

In the end, while we're all figuring out how to adjust teaching to this AI-filled world, methods like linguistic fingerprinting give us a solid starting point. It's not just about catching cheats; it's about understanding our students better and guiding them through a world where AI is just another tool in their learning kit.

(Try not to notice that this man has three legs and two of them are resting on nothing.)

As you noted with Sarah's piece, much written text will be at least AI-influenced. The idea of a "linguistic fingerprint" kind of assumes that we can define what voice is (which I'm not so sure we can), or that voice is static, when it is constantly changing. Soon student voice will be influenced by AI as well.

I would rather teach students to adapt their voice (with AI or otherwise) than "detect" their voice.

There is an assumption in education that writing and knowledge come from the individual, when in reality they come from the community ... AI simply scales this up.

Thank you for exploring this!

One thing that comes to mind is that the success stories cited all involve a tremendous amount of prior text from highly practiced writers. That seems categorically different from people who do not consider themselves writers and/or may be early in their practice (especially given that copying is often part of how people learn to write). I'd be curious what its error rate is, how it calculates it, and how it presents it; I know Turnitin did a lot of playing with numbers to make certain claims.