Podcast Episode 2 + Some Thoughts on Study Mode

Why AI learning tools feel powerful but might be missing the point

Before providing a short news roundup with a touch of personal analysis of ChatGPT’s Study Mode, I am delighted to share with you Episode Two of The Educator’s Dilemma, a new podcast I launched last month to share the incredible stories of educators in the field who are working to answer the myriad questions surrounding AI in education.

In this episode, I welcomed Dr. Maureen Russo Rodriguez, a career educator with experience across both Higher Ed and K-12 education. Maureen currently teaches language and literature at St. Mark’s School (MA) and shared with us an inspiring initiative of collaborative teacher professional development she co-founded with Nate Green of Sidwell Friends called “Co-Lab.” The free PD group meets monthly to engage in a series of guided AI Explorations in an effort to “facilitate educators’ experimentations with AI and create a space for participants from different schools to collaborate as they interrogate the power, the potential, and the pitfalls of AI use in the classroom.” To learn more, check out their website here and sign-up form here.

She also shared a preview of a forthcoming MIT Guidebook focusing on AI in Education as well as her perspectives on the current dilemma that educators face in responding to artificial intelligence.

I hope you enjoy this discussion as much as I did - and if you have any tips or ideas for future discussions, please reach out. I’d love to share your story with the broader community.

-Mike

On Study Mode

I told myself I wouldn't write about ChatGPT's Study Mode. Not because it isn't important, but because everyone and their mother has already weighed in with thoughtful analysis, and I didn't want to feel like I was chasing the latest shiny object. But here I am, writing about it anyway. The best laid plans of mice and men, I suppose.

For context: OpenAI, Google, and Anthropic have all launched similar "learning modes" over the past several months—ChatGPT's Study Mode, Google's Guided Learning in Gemini, and Claude's Learning Mode. These tools follow a Socratic dialogue format designed to create friction in the learning process, encouraging deeper understanding rather than quick answers and fast outputs.

Most of the analysis I've seen has been skeptical, and as you'll see below, I share many of those concerns. But I also think it's worth acknowledging that these guided learning modes represent a genuinely positive development. They make it harder for students to extract quick solutions and instead push them toward engagement through probing questions and step-by-step reasoning. Fundamentally, that's good.

That said, as I've experimented with these tools, I've noticed some limitations that give me pause.

First, they still create a fundamentally passive engagement structure. When I work with guided learning modes, I find myself sitting back and letting the bot do the driving. To use an autonomous vehicle analogy, a guided learning mode is certainly safer than taking my hands off the wheel of a car that consistently crashes, but I'm still not learning to drive; I'm being driven. Even when ChatGPT asks me Socratic questions, it's deciding what questions to ask, when to probe deeper, and how to sequence the learning. I'm responding, but I'm not directing.

As educators, we need to find ways to teach young people how to keep their hands on the wheel through active engagement methods. To me, AI Literacy (or fluency, or whatever you want to call it) is fundamentally about active engagement. Without it, I as the user am abdicating intellectual responsibility and forgoing the development of cognitive depth, and developing that depth should be the core focus of our educational adaptation strategies in the coming years.

Second, how many people will actually choose to engage with AI this way? As noted in a recent conversation, it feels unlikely that students will choose to utilize these tools when a shortcut is freely available. Said differently, human nature is human nature. Why would I take the long way when there is a short way right in front of me? And to make matters worse, if I am a high school or college student in 2025, I have that nagging feeling that my peers are taking the short way and “beating me out” in a competitive landscape, whether in educational environments or the workplace. It is true that educators could require students to use Study Mode and submit dialogue transcripts for review, and that would be valuable. But it's hard to see young people consistently choosing the long, friction-filled road when a shortcut sits right in front of them.

Third, there's the problem of a new kind of confirmation bias. When I use AI for learning, it feels like a powerful tool for accessing information that would have been much harder to understand before, similar to first engaging with the internet in 2000 (or thereabouts). It’s impossible for me to deny that this is more efficient than combing the internet or a printed manual “by hand.” The level of personalization is powerful.

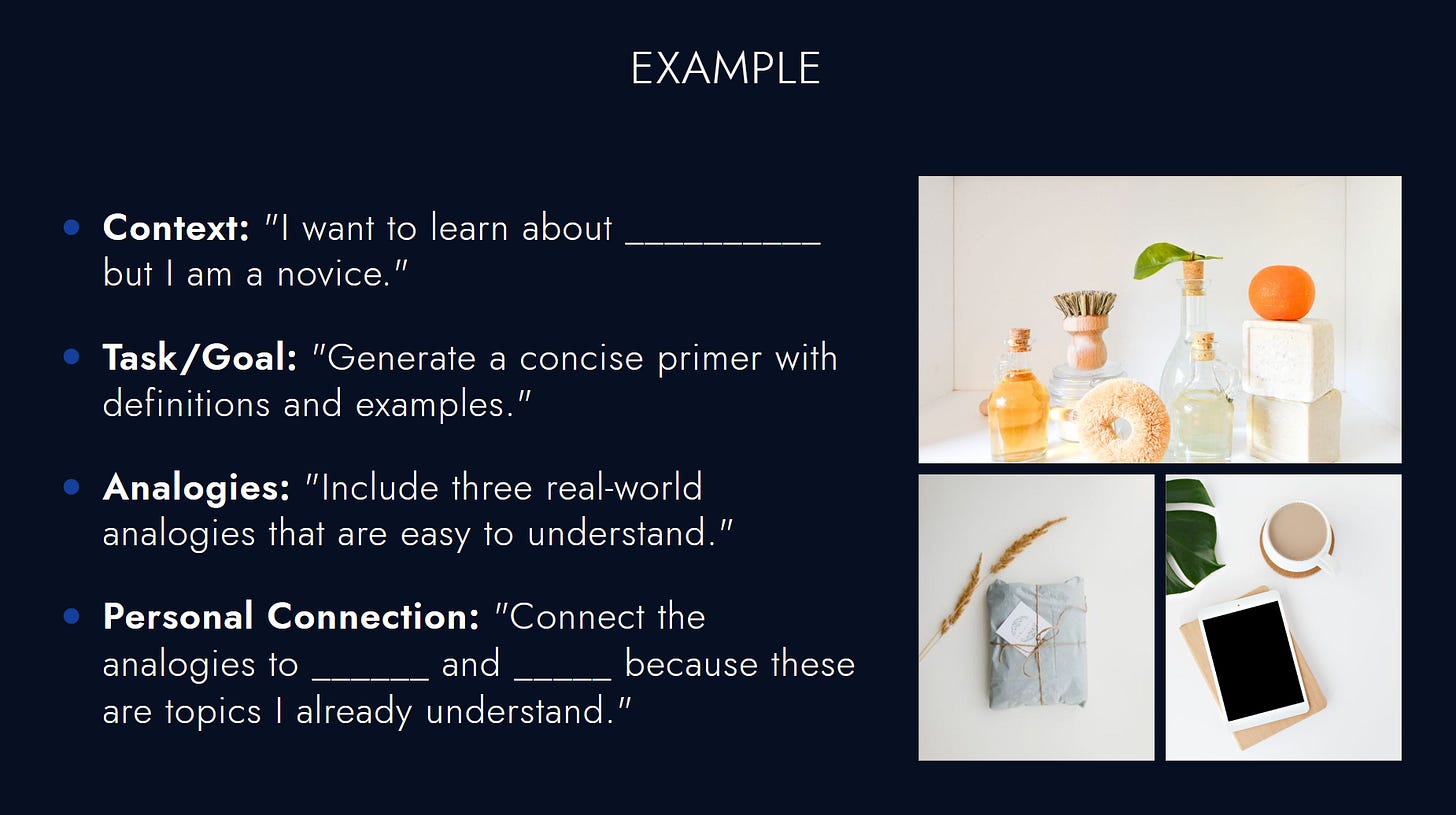

As an example, when I use AI to learn new concepts, I ask for analogies and connections to things I already understand, which gives me what feels like solid conceptual understanding. Here’s the prompt I share with educators when we discuss “AI for Learning” in a workshop I call “AI Literacy for Adults: A Humanities-Based Approach to Safe and Effective Use.”

But even in the successful cases where the analogies hit the mark and increase my understanding, there is a rub: until I put that knowledge into guided practice or get tested on my ability to explain or apply it, I don't really know it. I'm often flooded with the belief that I just learned something new when in reality I've only skimmed the surface. I wrote about this when Google first launched “Learn About,” a similarly powerful tool that may present the risk of a new kind of confirmation bias, if you are interested in exploring that concept further.

Don't get me wrong: the launch of these guided learning tools is still a positive development, as I noted above. These platforms are offering self-guided learning pathways that are more personalized than previous iterations, and I applaud any effort by AI companies to reduce sycophancy and shortcutting of learning journeys.

But if there are folks out there who think this is going to replace teachers, think again.

To underscore this point, I'll leave you with a quote from Satya Nitta - an IBM veteran who worked on bringing Watson into education, founded Merlyn Mind, and recently founded Emergence. He made these remarks on a call hosted by Ed3 DAO, with whom I'm currently working to produce research on teaching in the age of AI. (More details to come soon, stay tuned.)

"Teaching will never be automated. The idea that I, as a student, can work with a chatbot to help me through homework problems, be engaged day in and day out, and actually learn—this completely ignores the fact that teaching is a deeply human process. We are a very social species. We learn from other people, and we are motivated by other people." - Satya Nitta, Emergence

That's the perspective of a technologist with 30+ years of AI research and application experience who then looked at bringing it into education. Worth considering as we navigate these new tools and their proper place in learning.

Until next time,

Mike