When "Learning About" Isn't Really Learning: A Test Drive of Google's Latest Ed Tech

A flashy new gadget is cool

A few months ago, Google invited me to their "Learning in the AI Era" event in Palo Alto on November 19th. As a solo-preneur with my first child on the way (due in April!), I decided not to attend. But after test-driving their new Learn About platform – one of the stars of that event – I'm left with some thoughts about where educational technology is headed.

Learn About is exactly what it claims to be: a tool for learning about things. Whether you're curious about earthquakes, bonsai plants, or the intricate architecture of large language models, the platform serves up helpful explainers with visuals, videos, and an intuitive interface. It's like having a particularly well-organized encyclopedia on hand, with suggested prompts to help you go further down the rabbit hole.

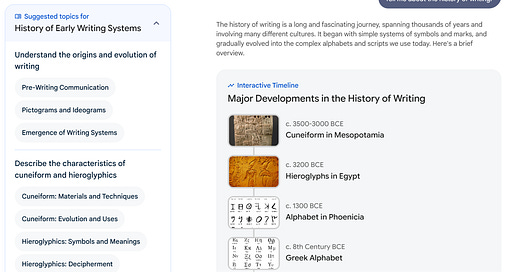

The user experience is smooth. Each topic begins with a concise overview, typically two or three paragraphs long, with an accompanying timeline, outline, or infographic. When I asked it to "tell me about the history of writing," I got a timeline and a few paragraphs about cuneiform, one of the earliest known forms of human writing.

At the bottom of each explanation, you'll find curated links, including YouTube videos. A sidebar offers related topics, creating a web of knowledge that is simultaneously exciting and overwhelming.

When, Where, Why, How?

This platform represents a step forward in accessing information. It could become a close cousin of Search, since each explainer serves up links for deeper analysis, or a first port of call for young researchers beginning to understand a new topic.

But the implications of supplanting our research processes with Learn About, or of marketing it as a game-changer for studying, are vast.

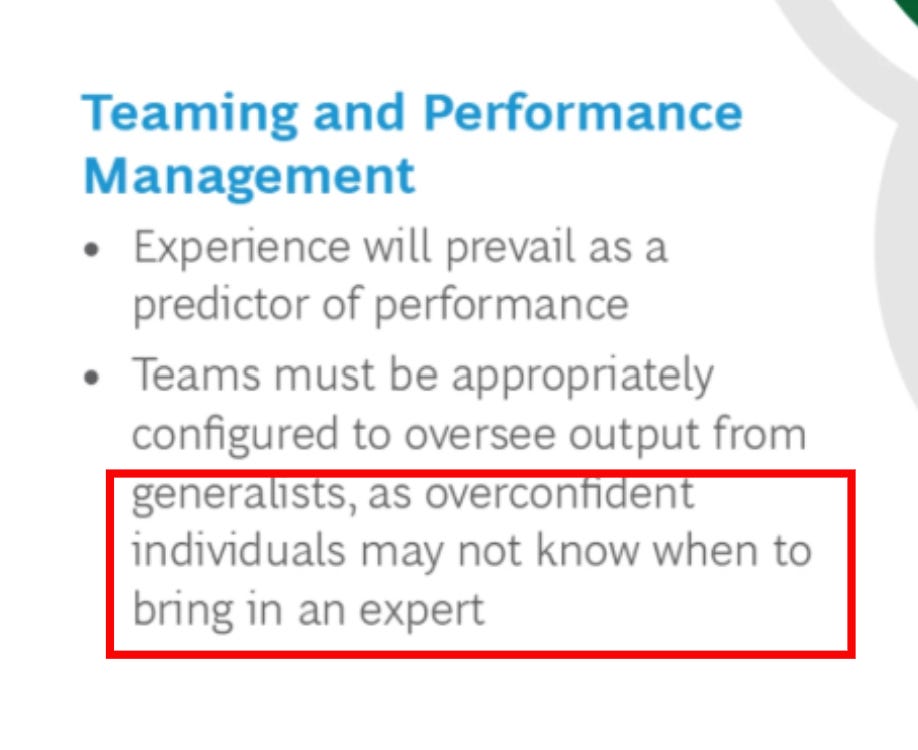

The platform is perfect for light-touch adult learning – continuous, self-directed learning for professionals who understand that learning is an intricate endeavor. Learn About could be part of an ecosystem that Boston Consulting Group researchers called "the AI exoskeleton" – a tool or set of tools that expand workers' capabilities into new knowledge areas.

But here's where things get interesting: while Google's Learn About provides an accessible entry point for exploring topics, it risks creating a false sense of expertise in users who mistake information consumption for genuine learning and mastery. BCG researchers found evidence of this in their study: consultants who used AI to complete coding tasks showed no lasting gain in skill or knowledge from the experience.

Think back to a time you tried to explain a complex topic a few hours after reading about it. You probably stumbled over concepts that had seemed clear while you were reading. This isn't a memory failure – it's the difference between passive recognition and active understanding.

Learn About excels at what it's designed for: providing quick, comprehensive overviews of topics. It's perfect for satisfying curiosity or getting oriented in a new subject area. But there's a subtle danger in this kind of polish and authority. When information is presented this smoothly, it's easy to mistake familiarity for mastery.

I'm reminded of how social media transformed our relationship with published content. Before platforms like Twitter and Instagram, published material typically went through rigorous editing and fact-checking, not to mention some financial investment from the publisher. As consumers, we were subtly aware of these gatekeeping mechanisms, so we placed extra trust in “published content”: we knew that someone, somewhere, had vetted it and considered it worthy of distribution under their company's name.

When social media platforms democratized publishing, many users continued to assign the same level of authority to content that hadn't gone through any verification process at all. This created a cognitive trap in which the typical user assumed most content was verified or “true,” since at first glance it seemed to have cleared the same publishing hurdles.

We are still unwinding the negative consequences of that shift, which gives a sense of the scope of the similar traps that large language models are likely to create.

Learn About, for all its sleekness and utility, is an apt example of this type of danger. Its professional presentation and authoritative tone might lead users to overestimate their grasp of a subject after a few minutes of browsing. This isn't a criticism of the tool itself – it's more a reflection on how we interact with technology. Social media can lead users to believe they are reliably informed when they are not, and AI platforms could exacerbate this trend.

An Illustrative Story

My father, a lawyer, taught me about true learning. In my middle school years, when I'd ask him to review an essay, he'd say, "Explain it to me out loud." I'd start reading from the paper. He'd stop me: "No, put it down, look at me, and explain it out loud."

I’d put the essay down and start stammering out a half-baked explanation. Before I got even halfway through, he would stop me. “Okay, here’s the deal. If you can explain it out loud without looking at it, that means you know it. Once you know it, you can revise it to make sure it’s an A paper. Go back upstairs and explain your paper to the wall, out loud. Do it until you have it down. Then come back down and explain it to me.”

This exercise taught me that true learning requires more than consuming information – it demands engagement, practice, and the ability to reconstruct knowledge independently. The average adult understands this, but the average student does not. The risk of rolling out Learn About across the educational spectrum without surrounding it with meaningful AI Literacy and curricular design is that it produces a generation of professionals with a false confidence (also highlighted by BCG researchers) that cannot easily be unwound.

Curricular Design Example

Here's how I'd address this in a classroom. I would present myself as Mr. Techno-Optimist, the Cool Techy Teacher, the AI Guy in the Classroom. I would share Learn About and hype it up as a tool that is going to “save you hours” when studying and learning a new topic.

Then I’d give them an assignment: research a topic of your choice using Learn About for the next two class periods. Take notes however you want. Organize the information however you want. No rules; let’s see what we can learn. At the end of the two days, you’ll present your findings to the class in a two-minute presentation.

On presentation day, I'd pull what is known as a ‘Project Pivot’: a twist, essentially. I’d break the news that they would have to present for two minutes from memory, with no notes and no visual aids.

Here’s what the room looks like after you, as the teacher, pull this type of ‘pivot.’ Blank stares from students imagining parental disappointment, angry "this isn't fair" gestures, fidgety bodies hoping to piece together something before their turn.

But after only a few struggling presentations, I'd stop the exercise. No grades would count. Instead, we'd discuss: What did presenters learn about their grasp of the content? How did others feel knowing they'd have to present without notes?

The key lessons emerge naturally:

Reading doesn't equal knowing

Learning requires application, mistakes, and practice

AI tools that funnel information aren't actually teaching

This is an example of a curricular activity that brings artificial intelligence tools into the classroom specifically to show students their limitations, rather than telling them about the dangers of AI through articles and videos.

Also, Learn About is genuinely useful, and kids will come to know about it over time whether we bring it into the classroom or not. So my argument is to get ahead of it, but to make sure the first several use cases do not focus on using the platform for efficiency, speed, or productivity. Instead, zero in on ways to deeply explore the nature of our intellectual interactions with the technology itself.

Think of it this way: we don’t just pick up a hammer and start swinging. We practice first, get a feel for its weight, analyze how and when it might cause damage, and then narrow the scope of what we can and cannot do with it. Just as we learn that a person cannot turn a screw with a hammer, we should first learn that a person cannot deeply embed knowledge into their psyche with a tool like Learn About.

As an aside, this is essentially what I am trying to do at Zainetek Educational Advisors.

I help design AI Literacy activities that drive home this point: AI can be helpful, but you have to know how to navigate it. If you are interested in learning more, please reach out.

Conclusion

Learn About represents an important development in educational technology. For educators and learners alike, the key is understanding the platform’s role in the broader learning journey. It can be a helpful starting point, but mastery requires moving beyond passive consumption to active engagement with the material.

The platform's name itself seems to acknowledge these limitations: they named it ‘Learn About,’ not ‘Master Everything.’ That bodes well, but it will be important to watch how Google markets the platform. In my view, Apple has already let us down by selling Apple Intelligence as a way to lie to your spouse about how much you care, lie to your boss about how much time you put into writing emails, or lie to your colleagues about how prepared you are for important meetings.

As AI-driven educational tools become more sophisticated, maintaining the distinction between learning about a topic and mastering it will be crucial for meaningful learning in the digital age.

Now I'm wondering what insights I missed at that Palo Alto event. Maybe next time, I'll make the trip.