Walk into any faculty meeting today and say the word “AI,” and you’ll likely get one of three reactions: a worried glance, a polite nod, or a visible eye-roll. It’s not that educators don’t care. It’s that they’re overwhelmed.

Across K–12 and higher education, administrators are asking a lot: “Learn generative AI.” “Try it in your classroom.” “Redesign your assessments.” But for faculty already carrying a full load, the invitation to just play around with a powerful, opaque, and risky technology often falls flat.

Here’s the real issue: we’re asking for experimentation without offering structure. Without guardrails, a sandbox doesn’t feel like a playground—it feels like quicksand.

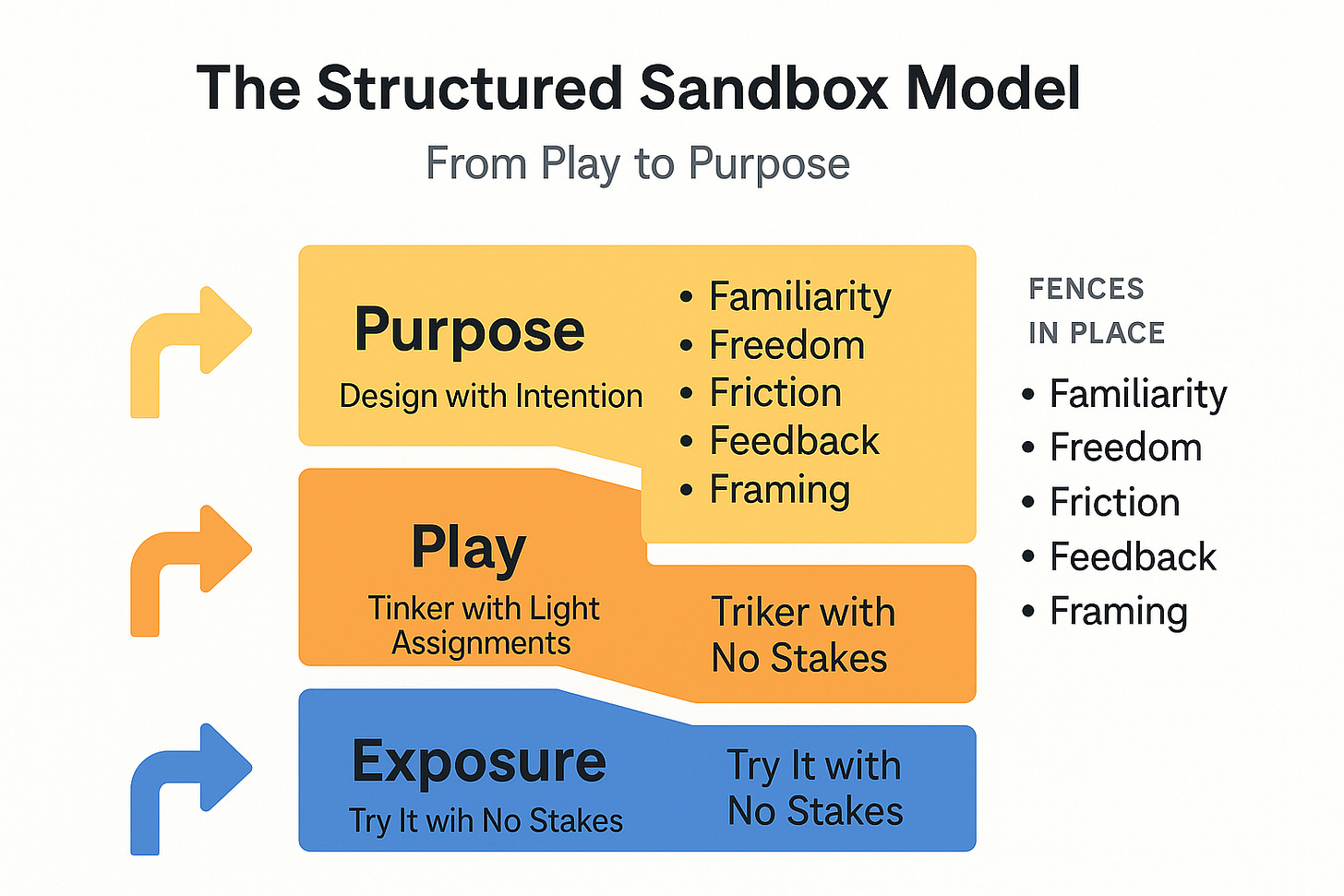

That’s where the Structured Sandbox Model comes in. It’s a framework I’ve developed to help institutions scaffold faculty experimentation with AI—not just hand them a tool and hope for the best.

What Makes a Sandbox Safe? The Five F Fences

Faculty hesitation isn’t always resistance. More often, it’s caution rooted in fatigue, uncertainty, or ethical concern. To lower the stakes and build trust, AI exploration needs to be bounded—not restricted, but structured.

The Five F Fences are five design principles that make AI experimentation safer, more meaningful, and more likely to stick:

Familiarity – Build baseline awareness. What does the tool do well? Where does it fall short?

Freedom – Offer permission to try things without consequences or pressure.

Friction – Add brief reflection to build awareness and discourage mindless use.

Feedback – Gather insights from peers or students to improve next steps.

Framing – Connect AI use to course goals, institutional ethics, and learning outcomes.

Each stage of the sandbox—Exposure, Play, and Purpose—is supported by these fences. They don’t limit creativity; they protect and guide it.

Want to set up a safe, structured sandbox for your school or department?

I help schools design customized AI exploration spaces that fit your faculty’s comfort level and curriculum goals. Visit AI Literacy Partners to get started.

The Structured Sandbox Model: From Play to Purpose

This model unfolds in three progressive stages, each giving faculty a little more room, and a little more responsibility, as they build confidence with AI. The same five fences protect every stage, keeping integration thoughtful and ethical.

Stage 1: Exposure – Try It with No Stakes

Faculty are invited to explore AI tools informally, without needing to apply them in their classrooms or justify their use.

Familiarity: Understand what the tool does and doesn’t do

Example: In a department meeting, a facilitator shows how ChatGPT and Claude summarize the same article, prompting faculty to compare and discuss the results.

Freedom: Permission to explore without consequences

Example: A faculty member uses ChatGPT to rewrite their course description in a more engaging tone—just for fun, with no pressure to use the result.

Friction: Light-touch reflection on the experience

Example: After a 10-minute sandbox session, faculty answer prompts like: “What surprised you? What frustrated you? What might this tool be good for?”

Feedback: Peer conversations about what worked or didn’t

Example: Faculty pair up and share one thing the AI got surprisingly right—or hilariously wrong—during their exploration.

Framing: No need to connect to curriculum (yet)

Example: The activity is framed as professional curiosity, not instructional redesign—allowing faculty to play without fear of evaluation.

Stage 2: Play – Tinker with Light Assignments

Faculty begin using AI in their classes in small, low-stakes ways that support—not disrupt—their instructional flow.

Familiarity: Start with known formats or pre-built prompts

Example: Faculty use a prompt from a shared library like “Summarize this article in plain English” to support a reading comprehension lesson.

Freedom: Let students choose when or how to use AI

Example: Students are given the option to brainstorm essay ideas with or without ChatGPT and then discuss how it influenced their process.

Friction: Invite students to reflect on their AI use

Example: Students complete an exit ticket after a task: “What did AI help you with? What did you change or reject? Why?”

Feedback: Collect and respond to student impressions

Example: Faculty conduct a short classroom poll asking, “Was AI helpful, confusing, or distracting?” and adjust next steps based on the feedback.

Framing: Connect AI use to skills or learning goals

Example: A history teacher frames ChatGPT as a “source to critique,” asking students to compare its summary of a primary document with their own.

Stage 3: Purpose – Design with Intention

In this stage, AI is no longer a novelty. It becomes a thoughtfully integrated component of teaching and learning—used with clarity, boundaries, and pedagogical alignment.

Familiarity: Understand the tool’s strengths and limitations

Example: A faculty member leads a discussion on the limits of AI-generated writing by showing students examples of hallucinated citations or oversimplified arguments.

Freedom: Provide structured choice in assignments

Example: In a research project, students can choose to use AI for outlining, brainstorming, or translation—but must document and reflect on what they used and why.

Friction: Embed structured reflection or analysis into assignments

Example: Students submit a short metacognitive reflection with their final draft: “How did AI influence your argument, structure, or voice? What did you accept or push back on?”

Feedback: Use peer or instructor evaluation of AI use itself

Example: A rubric includes a category for “critical use of AI,” evaluating whether the student improved, questioned, or blindly copied AI suggestions.

Framing: Align AI use with course goals and institutional ethics

Example: Faculty reference course policies, department norms, or schoolwide guidelines to help students understand what responsible AI use looks like in academic work.

With Stage 3 in place, the sandbox becomes more than a place to play—it becomes a design lab, where faculty prototype new methods of teaching, assessment, and student agency.

Conclusion: Sandboxes Aren’t Just for Kids

Faculty don’t need another mandate. They need space. They need structure. And most of all, they need support that respects their expertise while helping them adapt to a rapidly changing landscape.

The Structured Sandbox Model isn’t about pushing AI into every classroom. It’s about creating the conditions where thoughtful experimentation feels possible, where faculty can move from “What is this?” to “How might I use this?” to “What does good use actually look like in my context?”

By scaffolding that journey across Exposure, Play, and Purpose—and protecting it with Familiarity, Freedom, Friction, Feedback, and Framing—you’re not just encouraging innovation. You’re building a culture of curiosity, reflection, and intentional practice.

AI is not going away. But with the right sandbox, your faculty might just stop fearing it—and start shaping it.

Ready to move your faculty from AI confusion to confident experimentation?

I offer workshops, sandbox design sessions, and long-term strategy support for schools navigating AI integration. Let’s build something that works for your teachers.
