Stop Skimming Chats, Start Annotating Them

The next step in the development of a transcript-based pedagogy

Every teacher knows the fear: Did my student cheat?

As AI tools like ChatGPT spread, some educators have started asking students to submit their chat transcripts—the back-and-forth text conversations they have with AI. But often this request is framed only as a way to catch dishonesty.

The result? Students grow anxious. They hide their AI use, or avoid it altogether. That’s the opposite of what we need.

Here’s the overlooked truth: chat transcripts aren’t evidence of misconduct. They’re evidence of process. They show how students think through a problem, test ideas, and react to feedback. They’re artifacts of learning—just like rough drafts, graphic organizers, or lab notes.

The question for educators isn’t whether students used AI. The question is: how thoughtfully did they use it?

The Step We’re Skipping

Here’s the problem: we’re asking students to be thoughtful about their AI use without giving them the tools to do it.

In most classrooms, we borrow methods from higher education. We expect students to dive right into analyzing, reflecting, and evaluating. But remember: college professors can assume students already know how to annotate and analyze a text because those skills were built in earlier grades.

With AI, there’s no such foundation. Everyone, students and teachers alike, is still a novice. It’s like asking someone to write a research paper without ever teaching them how to take notes.

That’s why we need to go back to the basics. In early literacy instruction, fifth-grade readers learn to annotate short stories: underline themes, mark confusing passages, track character growth. These habits build the muscle of reflection.

We need the same approach for AI transcripts. Annotation is the missing foundation. And the first step in teaching it is simple: start doing it yourself.

Why Annotations Matter

Research consistently shows that annotation practices improve student learning outcomes across multiple domains. Researchers from Valdosta State University and the University of Texas found that eighth-grade students who learned annotation strategies showed significant increases in social studies achievement compared with control groups. Similarly, researchers from CUNY Medgar Evers College demonstrated that students who annotated reading materials produced more critically astute essays than those who simply highlighted passages.

University of Nebraska researchers note that social annotation "encourages students to actively read and engage with course materials," and that students "naturally pay closer attention to details, leading to improved reading comprehension."

The connection between annotation and higher-order thinking is particularly relevant for AI transcript analysis. Multiple studies demonstrate that metacognitive strategies are directly related to developing critical thinking, academic achievement, and self-regulated learning, while recent research confirms that metacognition makes unique contributions to critical thinking ability even when other cognitive factors are controlled for. When students annotate their own AI interactions, they engage in exactly this type of metacognitive reflection.

I stumbled onto this practice in the spring of 2024, when I sought to model a conceptual understanding of meaningful AI use in an assignment I facilitated with my ninth-grade English students. I found it so effective that I called on five forward-thinking educators to collaborate on an experimental research exhibit we called “The Field Guide to Effective GenAI Use,” since published in The WAC Repository in May.

In the exhibit, five other educators and I submitted annotated chats of our own AI use. (Ed. note: Please do not comment on the docs. They are meant to be exhibits of reflection rather than interactive documents.)

The goal was to demonstrate reflective metacognition in the context of our own AI use, in an effort to explore the benefit of applying this strategy in educational contexts.

One of the contributors, an English professor at Berkeley College and a thought leader in the AI in Education space, summed up the value of this practice more succinctly than I ever could: “The annotations wake us up,” he said.

The prevailing approach to AI use, especially among young people, is scattershot, untracked, and passive in nature. By stepping back and annotating ourselves on the page, we wake ourselves up. We develop, essentially, an entirely new section of brain development devoted to a specific metacognitive act associated with our technology use.

It is transformational, at a minimum, and over the long run, I believe it can be a core element of building a new kind of metacognition that will benefit humanity in ways that are difficult to imagine or articulate in our current state.

AI transcripts are pieces of text, just like any other. They can be analyzed, annotated, and discussed using the same literacy tools we've refined over decades. The difference is that students are examining not just what they read, but what they wrote, what they asked for, and how they responded to what they received back. This creates a unique opportunity for self-reflection that goes beyond traditional text analysis.

The real shift associated with viewing student chat logs is to move from "did you cheat?" to "explain your thinking." When we ask students to annotate their AI interactions, we're asking them to make their thought processes visible. We're teaching them to be reflective users of AI rather than secret ones.

What This Looks Like in Practice

As mentioned, the Field Guide brought together several educators to contribute annotated chats of their own AI use, including Jason Gulya, Kara Kennedy, and me, among others. We annotated these chats in two ways:

What was I thinking as I created a particular prompt/input? What strategy did I use and why?

As I evaluated the output, what was I thinking? Why was it useful or not useful? How did it lead me to react in the moment? What do I think about this output now that I look back?

Essentially, you can think of these annotations as “bread crumbs of thinking.” This is a useful umbrella for thinking about the process, but it is important to understand that the “annotation key” for any AI chat is not static: it changes depending on the bot, the task, the assignment, and, in the case of student use, the goals laid out by the educator.

I plan to demonstrate this in future AI-based HQIMs and lesson plans that I will be releasing as part of an effort to offer “Assessment Redesign Services” to K-16 institutions. You can read more about our new offering here. I am also offering workshops and trainings on this approach for institutions interested in exploring the concept. Reach out here, on LinkedIn, or via the website if interested.

In the meantime, here are some examples from The Field Guide that highlight the type of thinking that goes into annotating a chat.

Kara Kennedy is the leader of the AI Literacy Institute, a tutor, and a program specialist at Te Pukenga. In her chat, she was preparing for a tutoring session with a student. In one annotation, she analyzes an output through a nuanced lens, walking the reader through her own thinking by explaining why a particular output was both positive and negative at the same time.

She explains (to the reader) that the bot tried to meet her request but came up short. “The student has probably already seen number lines used in class,” she notes. But she doesn't stop there: she names what she is planning to do later in the chat, creating a narrative of process-based thinking that demonstrates metacognition, critical analysis of both herself and the AI system, clear communication, and an explanation of her planned solution to the problem.

Later, she analyzes one of her own inputs, explaining a new approach to the same problem by using different keywords to bring the system closer to an "understanding."

As a reader, I see a narrative of thinking developing. Even further, as an educator, if I were reading a student's chat and their accompanying annotations in this type of model, I could better understand why they took a particular approach and what they were seeking to achieve.

I could then provide feedback not just on “AI use,” but also on problem-solving, communication, critical thinking, and more. The chat is simply a playground for soft skills and, occasionally, subject matter expertise too.

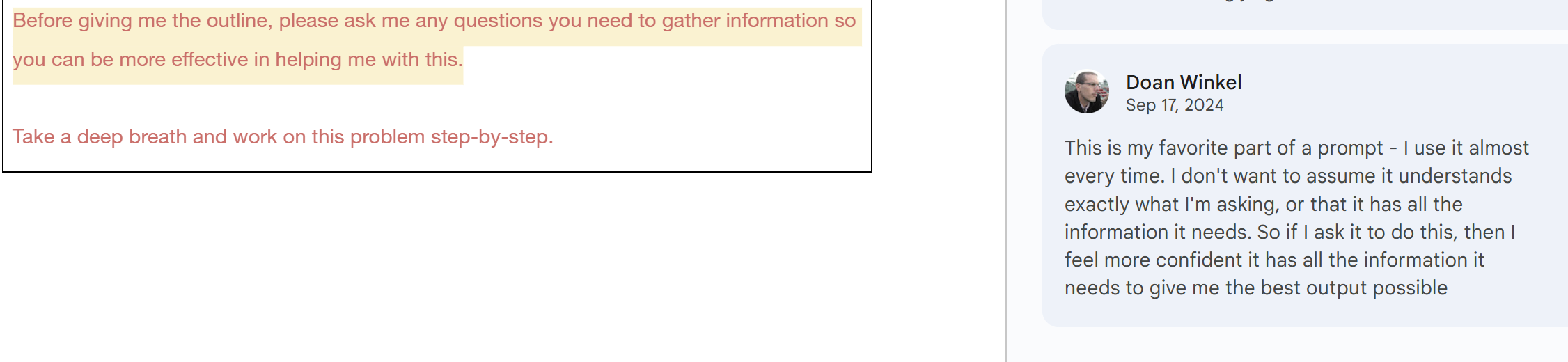

Doan Winkel is an entrepreneurship professor at John Carroll University. He submitted a chat in which he is planning an activity for his class. In his annotations, he explains his approach in the opening prompt: Doan asks the AI to ask him questions about the plan/project, a strategy that was not well known in prompting circles a year ago but has since become more common.

He explains that asking AI to ask him questions helps him to feel more confident that the system has all the information it needs to give him the best possible output. As the reader, not only do I get to understand (via the annotations) why the user approached AI this way, I get to see the depth of their reflection about their own approach. If I read this from a student, I would feel increasingly confident that they were slowing down and “thinking through” the engagement in an active manner, rather than passively allowing the system to be in charge.

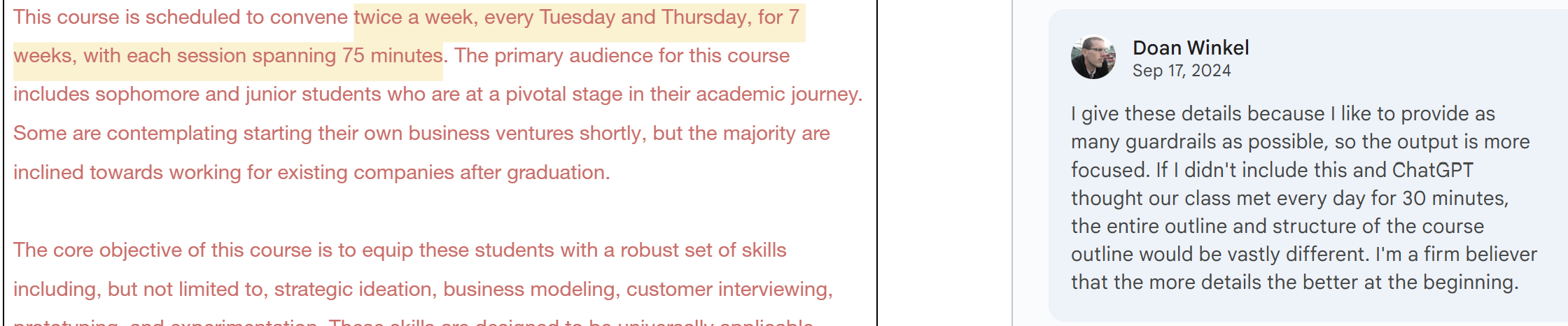

Below, Doan acknowledges there is more than one way to approach prompting. He names that he is a "firm believer" in providing more details up front. The key in his reflection, in my opinion, is not that "one way is better than the other," but the fact that the user on the page can explain why they took that particular approach.

He names that if he had not included this additional context, the entire structure and outline of the course would have been vastly different and potentially worse. Again, it’s not about whether I as the reader agree with that statement. Instead, it allows me as the reader to see an awareness of the potential pathways that exist within an AI interaction, as well as a notable level of discernment with respect to the engagement itself. From a student, this is exactly what we want.

One misconception about this exhibit when I first published it was that we were trying to teach people how to prompt. That’s not the case. Instead, the exhibit aims to provide models of a reflective, metacognitive act associated with AI use that could be employed in one’s own work or with students directly.

If this concept feels intuitive to you, I suggest trying it out with one of your own chats before asking your students to engage in this process. We develop the skill by first engaging in it ourselves, analyzing our own and each other’s annotations, and then passing the skill on to students.

Nick Potkalitsky was a high school English teacher at the time of this chat and its annotations. He is now an AI Specialist at the Educational Service Center of Central Ohio. In his annotations, Nick leans into the ambiguity of working with AI, choosing to leverage the gaps and limitations inherent in any GenAI interaction to reveal new insights associated with the teaching of writing.

Here is an example wherein he annotates and explains one of his own inputs:

As a reader, I am struck by the depth of this analysis. Were this a student, I would consider it a demonstration of a unique relationship to the system that could deepen my understanding of the user's thinking process. At a minimum, it could also create valuable discussion opportunities, and perhaps much more, were I to go further. Said differently, it’s a map of the brain.

To be sure, you might not expect your students to reach this level of sophistication, but you might also be surprised. In my own classroom, I was blown away by what my students put into the chat. Their annotations and reflections were similarly eye-opening. I can honestly say that I had more fun grading these assignments than any other in ten years of teaching.

In Sum…

Annotated chats are a new artifact of student thinking in the age of GenAI. Yes, a chat can be graded. If you have been following this blog for some time, you know that I seriously believe in that concept.

But guess what is even better than a gradable chat? An annotated chat with explanations of process. Even if the student fails to exhibit the level of thoughtfulness in the AI chat that we might have sought, they can “make up for it” on the back end by explaining their thinking.

If that is not “process over product,” if that is not a mechanism for increasing friction in the learning process for this era, I truly do not know what is.

Caveat Emptor: I do not believe that this is an activity that should be facilitated every day, every week, or even every month in classrooms. In my own experience, once a semester is plenty. And there is quite a bit of design and prep time associated with it for both educator and student, so it requires a layering process to ensure that all parties are on the same page.

But once you get there, students have to answer the real question when it comes to AI use: Can you explain your thinking while you were using AI?

What's Next

Here are my takeaways from two years of evaluating student chats, evaluating annotations of chats, grading chats, and more:

It is crucial that we move towards the development of new pedagogical approaches to respond to the risks, dangers, opportunities, and benefits of AI in education.

AI chat transcripts are pieces of text that can be evaluated just like any other, and they should be. As I wrote here, transcript analysis should become an entirely new field of study.

Early-stage literacy instructional methods are easily applicable to transcript analysis. Higher education professors, in particular, are "skipping steps" when bringing AI into the classroom.

The ability to reflect on our own AI use is, in my opinion, the single most important skill that human beings should build to preserve humanity, cognition, and creativity, while also becoming "better" AI users via the insertion of friction into the process.

An ability to explain one’s thinking within an inherently subjective framework requires a close connection with one’s own thought processes and approaches.

This skill is not static and can be developed through an iterative and cyclical framework.

Every user of AI should try annotating their own chat. That includes us as educators. If you are sensing an intellectual obstacle to teaching this practice, try it out on your own AI interaction.

Recommended Approach: Pick a chat from the not-so-recent past. This creates an appropriate amount of cognitive distance. And remember, do not judge yourself: you are not annotating for right and wrong. This is not a moral or ethical consideration. Your goal is to see if you can explain your own approach to an invisible reader.

And if you are looking for more creative motivation, imagine that your chat and its annotations will be read/analyzed by someone you respect. This helps to create the intrinsic motivation to "show out" on the page.

The methodology you've just read about (annotating chats, grading AI interactions, and building metacognitive skills through AI transcript analysis) has been my passion for the last two years. Only a few months ago, Aimee Skidmore and I implemented this multi-step framework in her 12th-grade English classroom, and we are excited to share the results when the time comes.

But this work is now available to educators and institutions ready to move beyond asking 'did you cheat?' and toward asking 'explain your thinking.' I've launched Assessment Redesign Services, Pedagogical Coaching, and Research Consulting built around this 'grade the chats' methodology, with help from leading classroom teachers who are experimenting with AI with their students right now. These services are context-specific, collaborative, and designed to help you implement what works in your specific environment.

Whether you're an individual educator ready to pilot annotation practices or an institution looking to redesign assessment for the AI age, the support is here. Learn more here.

Just like a year ago it was time to "stop grading essays, start grading chats," now it is time to "stop skimming chats, start annotating them."
