Grading the Chats: The Good, The Bad, and The Ugly of Student AI Use

Analyzing student interactions with AI to define "effective use"

In an effort to spice up my explanations of the “grade the chats” approach, I have decided to lean on the occasional corny pop culture reference, like the nod in the title above to my father’s favorite spaghetti western. Humor me!

This series will look at three sets of student AI transcripts. I will share snapshots of their AI use and offer an informal review of their approaches, writing, and responses.

The first post will focus on “the good” examples of chat transcripts from my students over the last year. The second will focus on “the bad.” The last won’t really focus on “the ugly,” but rather on the “gray” area where students tried to interact with AI the right way but struggled. I would have changed the title, but I figured “The Good, The Bad, and The Gray” would be less catchy.

As we analyze these transcripts together, I ask you to consider whether the chat transcript is actually a rich set of data on student thinking, rather than just a snapshot of their ability to engage in “prompt engineering.”

So without further ado, let’s take a look at the assignment context and some of the student AI use that the assessment produced. Hopefully, as I analyze these clips, nothing interferes with my aim.

Assignment Context

Last February, after my Freshman Honors English classes finished reading Romeo & Juliet, I created a mini-unit on prompt engineering. I taught them some basic approaches and demonstrated how I would write prompts in the context of a given objective.

Then, I provided them with an exemplar and a non-exemplar chat transcript on a topic we had already studied and asked them to analyze each transcript using a set of guiding questions. From there, we drew our own conclusions about what worked and what didn’t. This step was helpful because it allowed students to see with their own eyes the difference between prompting thoughtfully and treating LLMs like traditional technology.

Next, I asked them to create a list of “bucket list” items or lifelong goals. After discussing them as a class, I asked them to use ChatGPT to come up with a plan for achieving one of those items in the near future. Their goal was to use the LLM thoughtfully and in the ways I had outlined. After an in-class session, they submitted their transcripts for feedback on their approach.

Satisfied that my students were ready for their first “real” foray, I designed a project-based learning activity to be as broad and ambiguous as it possibly could be. I wanted to give them as many options as possible so that they would not know where to start. This way, when they inevitably had a large number of questions, I could naturally point them toward ChatGPT as a brainstorming partner.

Lastly, I told them that I would evaluate their interaction with ChatGPT for an assessment grade. Their mission was to follow my rubrics and instructions for AI use to ensure they were developing the right habits.

The screenshots below are examples of how they used the LLM in “the right way.”

The Really Good

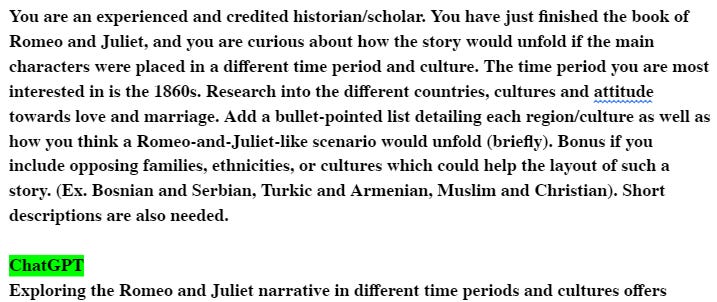

Let’s follow the checklist of “good” things this student did:

Created a role for the LLM that fit the task and objective.

Provided a specific time period for the LLM to consider. This immediately narrows the scope of what ChatGPT will draw on, which tends to make its responses more focused.

Asked ChatGPT to do research(!). The student is not assuming that the LLM “knows everything,” as many of us tend to do. This creates a step-by-step plan for the LLM that starts at the beginning.

Named the desired format for the LLM output (bullet points).

Provided user-generated options for ethnicities and religions that typically experience conflict, showing some initial brainstorming on the part of the student.

*Chef’s kiss*

You could argue this student included too many requests. For example, they asked both for a bullet-point list detailing each region/culture and for ChatGPT’s take on how a Romeo-and-Juliet scenario would unfold. If I were giving feedback to this student today, I would tell them to pick one or the other. Too many requests can occasionally short-circuit the brain behind the LLM. But on the whole, this initial prompt is very strong, in my estimation.

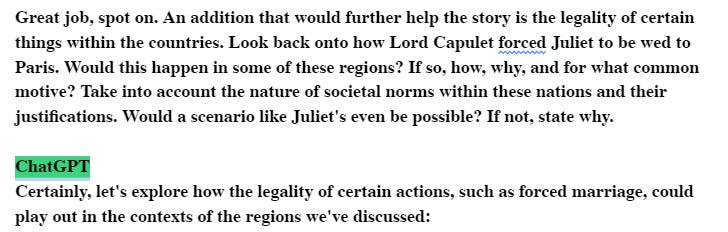

Here’s the student’s follow-up to a set of ideas provided by the bot.

The first positive I notice is that the student follows logically from the response, which tells me that they read carefully and thought about their next prompt. Many students do not do this, and it is obvious from the way they respond. Others pivot sharply in ways that can throw the bot off.

Second, the student offers the possibility that the scenario they are considering together might not even be feasible.

This shows me that the student is not assuming anything about the veracity of the bot’s output. They are even recognizing some potential holes in the first set of ideas provided by the LLM, which is evidence of critical thinking. This is also a magnificent example of a user recognizing their own agency and using domain expertise to ensure that their experience with the LLM is productive and responsible.

The Good

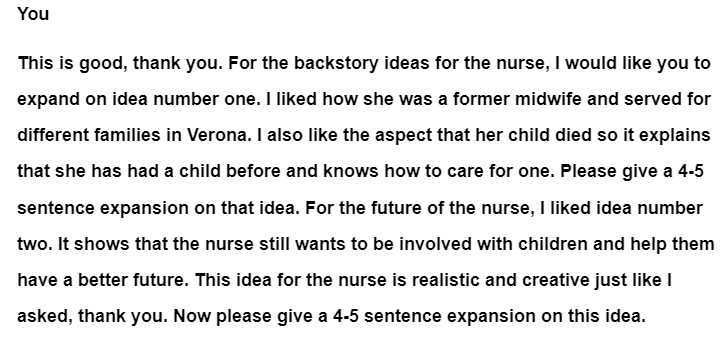

The next student decided to generate a “backstory” for the character of The Nurse in the play. ChatGPT provided a series of options for the character’s backstory from before the play, and my student followed up like this:

A lot to like here:

The student uses the “Outline Expansion Method” to ask for more detail on “idea number one.” This shows some critical and creative thinking, because the student is evaluating the responses and being thoughtful about what to use or discard.

The student explains what they “like” about each idea. This type of feedback helps steer the LLM toward more useful responses within the chat. More importantly, it shows analysis of the LLM response and subsequent articulation of their own thought. They are stopping to consider which option might be best and then purposely articulating their choice to the bot. The only reason this does not fall into the “really good” category is that the student did not explain why they liked what they liked.

The student remembers the play’s mention that The Nurse lost a child. This is a demonstration of content understanding that lives within the context of the chat.

The student also shows an understanding of story structure and the logic of a plot. They make a concerted effort to pick the LLM-produced ideas that follow most logically from what occurred in the play, rather than ideas involving aliens and spaceships just because they’re fun.

The student provides instructions for the format of the response (4-5 sentences). This is prompt engineering stuff that is only marginally relevant to the development of writing and critical thinking skills in the chat. Plus, it will probably be obsolete once next-gen models are released.

As with the first set of student prompts, you could argue that this student asked for too much. They asked for elaborations on both the backstory and the future story. However, had they focused on the backstory and then tried to prompt the LLM to “remember” what it said in its first response about the future story, they might have run into a different set of problems, like confusing the bot. So in this case, I think it is okay.

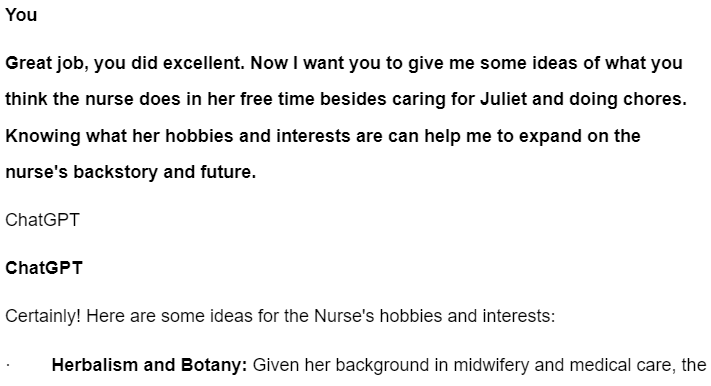

Here’s one of the follow-ups.

This is not bad. On one hand, I love the fact that the student is asking for even more character detail. It means they understand the nature of what makes a rich story and a good character, which shows understanding of the task and objective.

But the student assumes that ChatGPT “knows” The Nurse’s hobbies.

This may have been a slip of the tongue, but it may also represent a misunderstanding of the way LLMs work. If this student were standing here with me today, I would say, “All ChatGPT knows about The Nurse comes from the play itself and what people have written about it. It does not ‘know’ anything else, especially because The Nurse is not real. It is, however, going to be very good at brainstorming ideas for hobbies she might have enjoyed.”

The Pretty Good

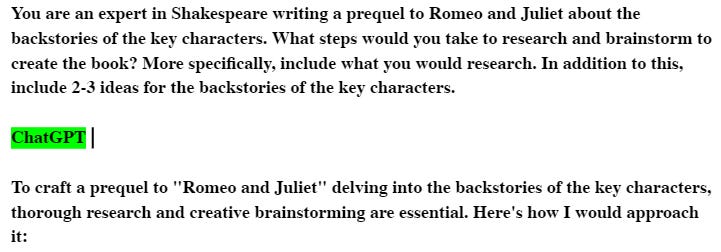

One aspect of this prompt is quite good, actually, because the student starts by asking ChatGPT for “steps” rather than a set of ideas. They want to know what the engine would research if they were going about creating this project. Similar to previous students, this student is starting “at the beginning,” rather than assuming that ChatGPT magically knows every answer.

However, the student then awkwardly asks for “two to three ideas for the backstories” at the end of the prompt, partly negating and muddling the sequence that was about to unfold.

Interestingly, and partly as a result, this chat went south... very south... quite quickly. The student packed too many requests into each prompt, and the system became very confused. Have you ever asked a human to answer five different questions at once? Yeah, it doesn’t work so well.

In the end, the LLM missed the target badly and, somewhat ironically, caused serious frustration in the student.

Been there!

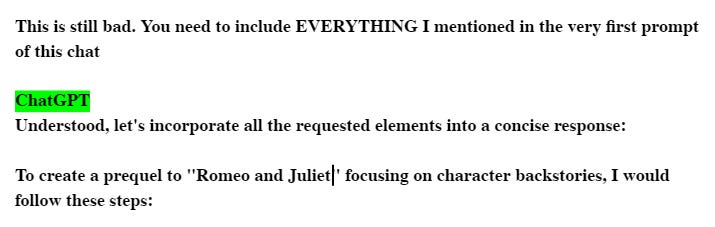

In this very emotional prompt, the student makes the mistake of asking ChatGPT to go back and answer EVERYTHING from the first prompt. This is a little bit like Abbott and Costello going back and forth with each other during their “Who’s on First?” routine.

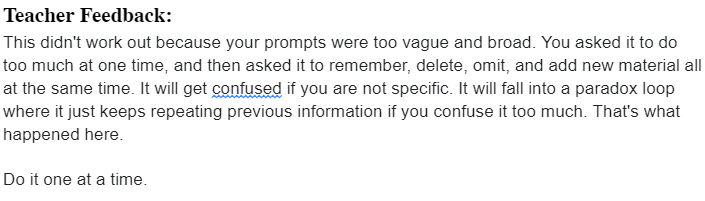

Now, LLMs have improved quite a bit since February 2024, but I would argue this is still not a great strategy. Here is the feedback I provided to the student:

Hopefully I wasn’t too harsh.

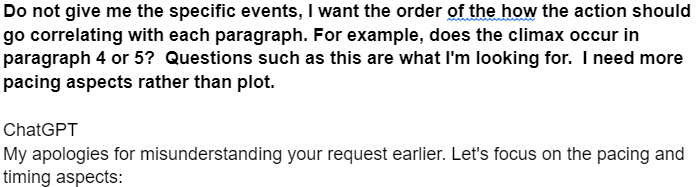

For fun, here is one more student clip that demonstrates how the previous student should have corrected the LLM and brought it back into the fold.

The student is specific about how the LLM should course-correct. The student does not rely on the LLM to reflect on its own work and magically correct its initial mistake.

This is also a great example of metacognition. After reading the output that “missed the mark,” this student had to stop and re-analyze their own goals and objectives (their purpose) to determine what the LLM misunderstood. They had to pin down again what they were seeking and use precise language to steer the bot back on course.

In this way, the student takes ownership of the chat, rather than expecting the bot to do everything. It might not seem like it, but this is actually very good stuff.

Conclusion

Hopefully, these examples show that the chat transcript is in fact a rich landscape on which a user reveals a variety of different types of understanding, or lack thereof. Furthermore, this type of analysis is what leads to a much deeper, more nuanced understanding of working with an LLM.

I’ll sign off with one final student clipping, the type that brings a smile to your face after hours of grading on a weekend afternoon.